Information

- Publication Type: PhD-Thesis

- Workgroup(s)/Project(s):

- Date: April 2010

- Date (Start): January 2005

- Date (End): April 2010

- 1st Reviewer: Michael Wimmer

- 2nd Reviewer: Dinesh Manocha

- Rigorosum: 27. April 2010

- First Supervisor: Michael Wimmer

- Keywords: 3D rendering, real-time rendering, ambient occlusion, visibility, occlusion culling

Abstract

Visibility computations are essential operations in computer graphics, which are required for rendering acceleration in the form of visibility culling, as well as for computing realistic lighting. Visibility culling, which is the main focus of this thesis, aims to provide output sensitivity by sending only visible primitives to the hardware. Despite the rapid development of graphics hardware, it remains of crucial importance for many applications like game development or architectural design, as the demands on the hardware regarding scene complexity increase accordingly. Solving the visibility problem has been an important research topic for many years, and countless methods have been proposed. Interestingly, there are still open research problems to this day, and many algorithms are either impractical or only usable for specific scene configurations, preventing their widespread use. Visibility culling algorithms can be separated into algorithms for visibility preprocessing and online occlusion culling. Visibility computations are also required to solve complex lighting interactions in the scene, ranging from soft and hard shadows to ambient occlusion and full-fledged global illumination. It is a big challenge to answer hundreds or thousands of visibility queries within a fraction of a second in order to reach real-time frame rates, which is one goal that we want to achieve in this thesis.

The contributions of this thesis are four novel algorithms that provide solutions for efficient visibility interactions in order to achieve high-quality output-sensitive real-time rendering, and are general in the sense that they work with any kind of 3D scene configuration. First, we present two methods dealing with the issue of automatically partitioning view space and object space into useful entities that are optimal for the subsequent visibility computations. Surprisingly, this problem area has been mostly ignored despite its importance, and view cells are mostly tweaked by hand in practice in order to reach optimal performance – a very time-consuming task. The first algorithm specifically deals with the creation of an optimal view space partition into view cells using a cost heuristic and sparse visibility sampling. The second algorithm extends this approach to optimize both view space subdivision and object space subdivision simultaneously.

Next, we present a hierarchical online culling algorithm that eliminates most limitations of previous approaches, and is rendering-engine friendly in the sense that it allows easy integration and efficient material sorting. It reduces the main problem of previous algorithms – the overhead due to many costly state changes and redundant hardware occlusion queries – to a minimum, obtaining up to a threefold speedup over previous work. Finally, we present an ambient occlusion algorithm which works in screen space, and show that high-quality shading with effectively hundreds of samples per pixel is possible in real time for both static and dynamic scenes by utilizing temporal coherence to reuse samples from previous frames.
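The idea of reusing ambient occlusion samples from previous frames can be illustrated with a small sketch. The abstract does not state the update rule, so the running weighted average below is an assumption, and the names `accumulate_ao`, `simulate_pixel`, `samples_per_frame` and `max_weight` are hypothetical. It is meant only to show how a few new samples per frame can add up to an effectively large sample count per pixel, not to reproduce the thesis's implementation.

```python
def accumulate_ao(prev_ao, prev_weight, new_ao, new_samples, max_weight=500):
    """Blend this frame's AO estimate into a per-pixel running average.

    prev_ao      -- accumulated AO value from earlier frames (assumed in [0, 1])
    prev_weight  -- number of samples already accumulated for this pixel
    new_ao       -- AO estimate computed from this frame's samples
    new_samples  -- number of samples taken this frame (must be > 0)
    max_weight   -- hypothetical cap so old data cannot dominate forever
    """
    total = prev_weight + new_samples
    blended = (prev_weight * prev_ao + new_samples * new_ao) / total
    return blended, min(total, max_weight)


def simulate_pixel(frame_estimates, samples_per_frame=8):
    """Feed a sequence of per-frame AO estimates through the accumulator."""
    # A newly visible (disoccluded) pixel would restart from weight 0 like this.
    ao, weight = 0.0, 0
    for estimate in frame_estimates:
        ao, weight = accumulate_ao(ao, weight, estimate, samples_per_frame)
    return ao, weight


if __name__ == "__main__":
    ao, weight = simulate_pixel([0.5, 0.7, 0.6])
    print(f"accumulated AO = {ao:.3f} from {weight} effective samples")
```

With 8 samples per frame, three frames already yield 24 effective samples for a pixel that stays valid, which is why the count can reach the hundreds after a short time in this simplified model.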

Additional Files and Images

Additional images and videos

Additional files


BibTeX

@phdthesis{Mattausch-2010-vcr,
  title =      "Visibility Computations for Real-Time Rendering in General
               3D Environments",
  author =     "Oliver Mattausch",
  year =       "2010",
  abstract =   "Visibility computations are essential operations in computer
               graphics, which are required for rendering acceleration in
               the form of visibility culling, as well as for computing
               realistic lighting. Visibility culling, which is the main
               focus of this thesis, aims to provide output sensitivity by
               sending only visible primitives to the hardware. Despite
               the rapid development of graphics hardware, it remains of
               crucial importance for many applications like game
               development or architectural design, as the demands on the
               hardware regarding scene complexity increase accordingly.
               Solving the visibility problem has been an important
               research topic for many years, and countless methods have
               been proposed. Interestingly, there are still open research
               problems to this day, and many algorithms are either
               impractical or only usable for specific scene
               configurations, preventing their widespread use. Visibility
               culling algorithms can be separated into algorithms for
               visibility preprocessing and online occlusion culling.
               Visibility computations are also required to solve complex
               lighting interactions in the scene, ranging from soft and
               hard shadows to ambient occlusion and full-fledged global
               illumination. It is a big challenge to answer hundreds or
               thousands of visibility queries within a fraction of a
               second in order to reach real-time frame rates, which is one
               goal that we want to achieve in this thesis. The
               contributions of this thesis are four novel algorithms that
               provide solutions for efficient visibility interactions in
               order to achieve high-quality output-sensitive real-time
               rendering, and are general in the sense that they work with
               any kind of 3D scene configuration. First, we present two
               methods dealing with the issue of automatically partitioning
               view space and object space into useful entities that are
               optimal for the subsequent visibility computations.
               Surprisingly, this problem area has been mostly ignored
               despite its importance, and view cells are mostly tweaked by
               hand in practice in order to reach optimal performance – a
               very time-consuming task. The first algorithm specifically
               deals with the creation of an optimal view space partition
               into view cells using a cost heuristic and sparse visibility
               sampling. The second algorithm extends this approach to
               optimize both view space subdivision and object space
               subdivision simultaneously. Next, we present a hierarchical
               online culling algorithm that eliminates most limitations of
               previous approaches, and is rendering-engine friendly in the
               sense that it allows easy integration and efficient material
               sorting. It reduces the main problem of previous algorithms
               – the overhead due to many costly state changes and
               redundant hardware occlusion queries – to a minimum,
               obtaining up to a threefold speedup over previous work.
               Finally, we present an ambient occlusion algorithm which
               works in screen space, and show that high-quality shading
               with effectively hundreds of samples per pixel is possible
               in real time for both static and dynamic scenes by utilizing
               temporal coherence to reuse samples from previous frames.",
  month =      apr,
  address =    "Favoritenstrasse 9-11/E193-02, A-1040 Vienna, Austria",
  school =     "Institute of Computer Graphics and Algorithms, Vienna
               University of Technology",
  keywords =   "3D rendering, real-time rendering, ambient occlusion,
               visibility, occlusion culling",
  URL =        "https://www.cg.tuwien.ac.at/research/publications/2010/Mattausch-2010-vcr/",
}

buddha:

Temporal SSAO on the Happy Buddha model

convergence:

Temporal SSAO convergence on an animated character

engine:

Our engine combines occlusion culling + SSAO + shadows

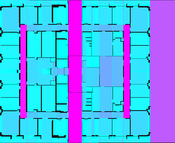

image-viewcells-bsp:

Render cost visualization (BSP)

image-viewcells-vsp:

Render cost visualization (our method)

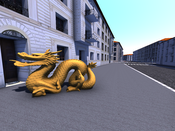

image:

Temporal SSAO on the Stanford dragon

vienna:

Snapshot in Vienna
