Information

- Publication Type: Journal Paper (without talk)

- Workgroup(s)/Project(s):

- Date: February 2016

- DOI: 10.1109/TVCG.2015.2430333

- ISSN: 1077-2626

- Journal: IEEE Transactions on Visualization & Computer Graphics

- Number: 2

- Volume: 22

- Pages: 1127 – 1137

Abstract

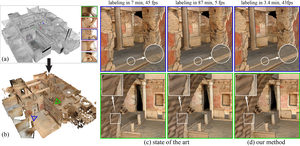

With the enormous advances of the acquisition technology over the last years, fast processing and high-quality visualization of large point clouds have gained increasing attention. Commonly, a mesh surface is reconstructed from the point cloud and a high-resolution texture is generated over the mesh from the images taken at the site to represent surface materials. However, this global reconstruction and texturing approach becomes impractical with increasing data sizes. Recently, due to its potential for scalability and extensibility, a method for texturing a set of depth maps in a preprocessing and stitching them at runtime has been proposed to represent large scenes. However, the rendering performance of this method is strongly dependent on the number of depth maps and their resolution. Moreover, for the proposed scene representation, every single depth map has to be textured by the images, which in practice heavily increases processing costs. In this paper, we present a novel method to break these dependencies by introducing an efficient raytracing of multiple depth maps. In a preprocessing phase, we first generate high-resolution textured depth maps by rendering the input points from image cameras and then perform a graph-cut based optimization to assign a small subset of these points to the images. At runtime, we use the resulting point-to-image assignments (1) to identify for each view ray which depth map contains the closest ray-surface intersection and (2) to efficiently compute this intersection point. The resulting algorithm accelerates both the texturing and the rendering of the depth maps by an order of magnitude.
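The runtime task named in the abstract, finding for each view ray the depth map that contains the closest ray-surface intersection, can be illustrated with a brute-force baseline. The sketch below is not the paper's method: it tests every depth map for every ray, which is exactly the dependency on the number of depth maps that the paper sets out to break, and it does not use point-to-image assignments. The `DepthMap` class, the orthographic camera looking along +z, the unit-sized cells, and the `step` and `t_max` parameters are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class DepthMap:
    """Hypothetical orthographic depth map (camera looks along +z).

    depth[row][col] is the surface z over the unit cell whose lower corner
    is (x0 + col, y0 + row).
    """
    depth: List[List[float]]
    x0: float = 0.0
    y0: float = 0.0

    def lookup(self, x: float, y: float) -> Optional[float]:
        col = math.floor(x - self.x0)
        row = math.floor(y - self.y0)
        if 0 <= row < len(self.depth) and 0 <= col < len(self.depth[0]):
            return self.depth[row][col]
        return None  # the point projects outside this depth map


def intersect(dm: DepthMap, origin: Vec3, direction: Vec3,
              t_max: float = 20.0, step: float = 0.05) -> Optional[float]:
    """Return the ray parameter t of the first hit, or None on a miss.

    Assumes the ray origin lies in front of the stored surface.
    """
    def behind(t: float) -> bool:
        x, y, z = (origin[k] + t * direction[k] for k in range(3))
        d = dm.lookup(x, y)
        return d is not None and z >= d

    t_prev, t = 0.0, step
    while t <= t_max:
        if behind(t):
            # The sample crossed the surface: refine the hit by bisection.
            lo, hi = t_prev, t
            for _ in range(20):
                mid = 0.5 * (lo + hi)
                if behind(mid):
                    hi = mid
                else:
                    lo = mid
            return hi
        t_prev = t
        t += step
    return None


def closest_hit(maps: Sequence[DepthMap], origin: Vec3,
                direction: Vec3) -> Optional[Tuple[int, float]]:
    """Brute force: test every depth map, keep the nearest intersection."""
    best: Optional[Tuple[int, float]] = None
    for index, dm in enumerate(maps):
        t = intersect(dm, origin, direction)
        if t is not None and (best is None or t < best[1]):
            best = (index, t)
    return best


if __name__ == "__main__":
    far = DepthMap([[5.0] * 4 for _ in range(4)])
    near = DepthMap([[3.0] * 4 for _ in range(4)])
    # Both maps cover the ray; the nearer surface should be selected.
    print(closest_hit([far, near], (1.5, 1.5, 0.0), (0.0, 0.0, 1.0)))
```

The cost of `closest_hit` grows linearly with the number of depth maps and with the marching resolution, which gives a sense of why a per-ray selection of the relevant depth map is attractive for large scenes.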


BibTeX

@article{arikan-2015-dmrt,
  title = "Multi-Depth-Map Raytracing for Efficient Large-Scene Reconstruction",
  author = "Murat Arikan and Reinhold Preiner and Michael Wimmer",
  year = "2016",
  abstract = "With the enormous advances of the acquisition technology
    over the last years, fast processing and high-quality
    visualization of large point clouds have gained increasing
    attention. Commonly, a mesh surface is reconstructed from
    the point cloud and a high-resolution texture is generated
    over the mesh from the images taken at the site to represent
    surface materials. However, this global reconstruction and
    texturing approach becomes impractical with increasing data
    sizes. Recently, due to its potential for scalability and
    extensibility, a method for texturing a set of depth maps in
    a preprocessing and stitching them at runtime has been
    proposed to represent large scenes. However, the rendering
    performance of this method is strongly dependent on the
    number of depth maps and their resolution. Moreover, for the
    proposed scene representation, every single depth map has to
    be textured by the images, which in practice heavily
    increases processing costs. In this paper, we present a
    novel method to break these dependencies by introducing an
    efficient raytracing of multiple depth maps. In a
    preprocessing phase, we first generate high-resolution
    textured depth maps by rendering the input points from image
    cameras and then perform a graph-cut based optimization to
    assign a small subset of these points to the images. At
    runtime, we use the resulting point-to-image assignments (1)
    to identify for each view ray which depth map contains the
    closest ray-surface intersection and (2) to efficiently
    compute this intersection point. The resulting algorithm
    accelerates both the texturing and the rendering of the
    depth maps by an order of magnitude.",
  month = feb,
  doi = "10.1109/TVCG.2015.2430333",
  issn = "1077-2626",
  journal = "IEEE Transactions on Visualization & Computer Graphics",
  number = "2",
  volume = "22",
  pages = "1127--1137",
  URL = "https://www.cg.tuwien.ac.at/research/publications/2016/arikan-2015-dmrt/",
}


![video: [45MB]](https://www.cg.tuwien.ac.at/research/publications/2016/arikan-2015-dmrt/arikan-2015-dmrt-video:thumb175.png)