Information

- Publication Type: PhD-Thesis

- Workgroup(s)/Project(s): not specified

- Date: December 2008

- Date (End): 2008

- TU Wien Library:

- Second Supervisor: Eduardo Romero

- Rigorosum: 20. February 2008

- First Supervisor: Michael Wimmer

Abstract

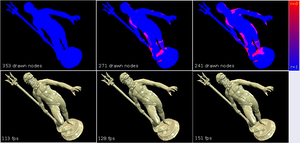

Interactive rendering of complex models comprising several million polygons requires a substantial reduction in the amount of processed data. Level-of-detail (LOD) methods aggressively reduce the amount of data sent to the GPU at the expense of image quality. Hierarchical level-of-detail (HLOD) methods have proved particularly capable of interactive visualisation of huge data sets by precomputing levels of detail at different levels of a spatial hierarchy. HLODs support out-of-core algorithms in a straightforward way and allow an optimal balance between CPU and GPU load during rendering. Occlusion culling represents an orthogonal approach to reducing the number of rendered primitives: it aims to quickly cull the invisible part of the model and render only its visible part. Most recent methods use hardware occlusion queries (HOQs) for this task.

The effects of HLODs and occlusion culling can be successfully combined. Firstly, nodes which are completely invisible can be culled. Secondly, HOQ results can be used for visible nodes when refining an HLOD model: depending on a node's degree of visibility and on the perceptual phenomenon of visual masking, it may be determined that refining the node further would bring no gain in the final image. In the latter case, HOQs allow more aggressive culling of the HLOD hierarchy, further reducing the number of rendered primitives. However, owing to the latency between issuing an HOQ and the availability of its result, using HOQs directly in refinement criteria causes CPU stalls and GPU starvation. This thesis introduces a novel error metric that takes visibility information (gathered from HOQs) as an integral part of refining an HLOD model; to the best of our knowledge, this is the first such approach in this context.

A novel traversal algorithm for HLOD refinement is also presented, which takes full advantage of the introduced HOQ-based error metric. The algorithm minimises CPU stalls and GPU starvation by predicting HLOD refinement conditions using the spatio-temporal coherence of visibility. The combined approach improves performance at the same visual quality, while the occlusion culling technique remains conservative. The error metric supports both polygon-based and point-based HLODs and makes full use of the information gathered by HOQs. The traversal algorithm makes full use of the spatial and temporal coherence inherent in hierarchical representations. The approach is straightforward to implement.

Additional Files and Images
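The two uses of HOQ results described above (culling invisible nodes, and stopping refinement of barely visible ones) can be illustrated with a minimal sketch. It is not the error metric defined in the thesis: the `HlodNode` fields, the `visibility_ratio` helper and the rule of scaling a screen-space error by the fraction of visible pixels are all assumptions made for illustration, and the query results are supplied as plain numbers rather than read back from the GPU.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class HlodNode:
    """Hypothetical HLOD node; all field names are illustrative assumptions."""
    name: str
    screen_space_error: float  # projected geometric error, in pixels
    bbox_pixels: int           # pixels covered by the node's bounding volume
    visible_pixels: int        # pixels reported visible by an occlusion query
    children: List["HlodNode"] = field(default_factory=list)


def visibility_ratio(node: HlodNode) -> float:
    """Fraction of the node's projected area that passed the occlusion test."""
    if node.bbox_pixels <= 0:
        return 0.0
    return min(1.0, node.visible_pixels / node.bbox_pixels)


def select_nodes(node: HlodNode, tolerance: float, out: List[str]) -> List[str]:
    """Collect the names of the nodes to render for a given error tolerance."""
    ratio = visibility_ratio(node)
    if ratio == 0.0:
        # Completely occluded: cull the node and its whole subtree.
        return out
    # Assumed weighting: a mostly hidden node contributes less visible error.
    weighted_error = node.screen_space_error * ratio
    if weighted_error <= tolerance or not node.children:
        # Good enough (or a leaf): render this level of detail as is.
        out.append(node.name)
        return out
    # Otherwise refine into the children.
    for child in node.children:
        select_nodes(child, tolerance, out)
    return out
```

With this rule, a node whose unweighted error exceeds the tolerance is still rendered at its coarse level when only a small part of it is visible, which is where the saving in rendered primitives would come from. A real renderer would also have to deal with query latency, which this sketch ignores.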

Weblinks

No further information available.

BibTeX

@phdthesis{charalambos-thesis_hlod,
  title = "HLOD Refinement Driven by Hardware Occlusion Queries",
  author = "Jean Pierre Charalambos",
  year = "2008",
  abstract = "In order to achieve interactive rendering of complex models
    comprising several millions of polygons, the amount of
    processed data has to be substantially reduced.
    Level-of-detail (LOD) methods allow the amount of data sent
    to the GPU to be aggressively reduced at the expense of
    sacrificing image quality. Hierarchical level-of-detail
    (HLOD) methods have proved particularly capable of
    interactive visualisation of huge data sets by precomputing
    levels-of-detail at different levels of a spatial hierarchy.
    HLODs support out-of-core algorithms in a straightforward
    way and allow an optimal balance between CPU and GPU load
    during rendering. Occlusion culling represents an orthogonal
    approach for reducing the amount of rendered primitives.
    Occlusion culling methods aim to quickly cull the invisible
    part of the model and render only its visible part. Most
    recent methods use hardware occlusion queries (HOQs) to
    achieve this task. The effects of HLODs and occlusion
    culling can be successfully combined. Firstly, nodes which
    are completely invisible can be culled. Secondly, HOQ
    results can be used for visible nodes when refining an HLOD
    model; according to the degree of visibility of a node and
    the visual masking perceptual phenomenon, then it could be
    determined that there would be no gain in the final
    appearance of the image obtained if the node were further
    refined. In the latter case, HOQs allow more aggressive
    culling of the HLOD hierarchy, further reducing the amount
    of rendered primitives. However, due to the latency between
    issuing an HOQ and the availability of its result, the
    direct use of HOQs for refinement criteria cause CPU stalls
    and GPU starvation. This thesis introduces a novel error
    metric, taking visibility information (gathered from HOQs)
    as an integral part of refining an HLOD model, this being
    the first approach within this context to the best of our
    knowledge. A novel traversal algorithm for HLOD refinement
    is also presented for taking full advantage of the
    introduced HOQ-based error metric. The algorithm minimises
    CPU stalls and GPU starvation by predicting HLOD refinement
    conditions using spatio-temporal coherence of visibility.
    Some properties of the combined approach presented here
    involve improved performance having the same visual quality
    (whilst our occlusion culling technique still remained
    conservative). Our error metric supports both polygon-based
    and point-based HLODs, ensuring full use of HOQ results (our
    error metrics take full advantage of the information
    gathered in HOQs). Our traversal algorithm makes full use of
    the spatial and temporal coherency inherent in hierarchical
    representations. Our approach can be straightforwardly
    implemented.",
  month = dec,
  address = "Favoritenstrasse 9-11/E193-02, A-1040 Vienna, Austria",
  school = "Institute of Computer Graphics and Algorithms, Vienna University of Technology",
  URL = "https://www.cg.tuwien.ac.at/research/publications/2008/charalambos-thesis_hlod/",
}
