Information

- Publication Type: Conference Paper

- Date: March 2019

- Publisher: IEEE

- Location: Osaka, Japan

- Lecturer: Markus Schütz

- Event: IEEE VR 2019, the 26th IEEE Conference on Virtual Reality and 3D User Interfaces

- DOI: 10.1109/VR.2019.8798284

- Booktitle: 2019 IEEE Conference on Virtual Reality and 3D User Interfaces

- Conference date: 23 March 2019 – 27 March 2019

- Pages: 103 – 110

- Keywords: point clouds, virtual reality, VR

Abstract

Real-time rendering of large point clouds requires acceleration structures that reduce the number of points drawn on screen. State-of-the-art algorithms group and render points in hierarchically organized chunks with varying extent and density, which results in sudden changes of density from one level of detail to another, as well as noticeable popping artifacts when additional chunks are blended in or out. These popping artifacts are especially noticeable at lower levels of detail, and consequently in virtual reality, where high performance requirements impose a reduction in detail. We propose a continuous level-of-detail method that exhibits gradual rather than sudden changes in density. Our method continuously recreates a down-sampled vertex buffer from the full point cloud, based on camera orientation, position, and distance to the camera, in a point-wise rather than chunk-wise fashion and at speeds up to 17 million points per millisecond. As a result, additional details are blended in or out in a less noticeable and significantly less irritating manner compared to the state of the art. The improved acceptance of our method was successfully evaluated in a user study.
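To make the point-wise idea concrete, here is a minimal CPU-side Python sketch of distance-based continuous LOD selection. It is an illustration under stated assumptions, not the authors' implementation: it assumes each point already carries an integer level from some hierarchy (e.g., an octree), and that adding a random fractional offset turns it into a continuous level so that points of one level appear one at a time rather than as a whole chunk. The function names, the `log2` distance falloff, and the `base_distance` parameter are hypothetical, and camera orientation is ignored.

```python
import math
import random
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def assign_continuous_levels(discrete_levels: List[int], seed: int = 0) -> List[float]:
    """Hypothetical preprocessing: add a uniform random offset in [0, 1) to each
    integer level, spreading points of one level across a continuous interval."""
    rng = random.Random(seed)
    return [lvl + rng.random() for lvl in discrete_levels]


def target_level(distance: float, max_level: float, base_distance: float) -> float:
    """Hypothetical falloff: full detail up to base_distance, then one level
    less per doubling of distance. The result varies smoothly, not in steps."""
    if distance <= base_distance:
        return max_level
    return max_level - math.log2(distance / base_distance)


def select_points(positions: List[Vec3], levels: List[float], camera: Vec3,
                  max_level: float, base_distance: float) -> List[Vec3]:
    """Rebuild the down-sampled buffer point by point: keep a point if its
    continuous level lies below the target level at its camera distance."""
    kept = []
    for p, lvl in zip(positions, levels):
        d = math.dist(p, camera)
        if lvl < target_level(d, max_level, base_distance):
            kept.append(p)
    return kept


# Usage sketch: 1,000 points on a line with hypothetical discrete levels 0..3.
positions = [(i * 0.1, 0.0, 0.0) for i in range(1000)]
levels = assign_continuous_levels([i % 4 for i in range(1000)])
for cam_x in (-10.0, -40.0, -160.0):
    kept = select_points(positions, levels, (cam_x, 0.0, 0.0),
                         max_level=4.0, base_distance=10.0)
    print(cam_x, len(kept))
```

Because every point has its own fractional threshold, moving the camera changes the selected set a few points at a time, which is the gradual density change the abstract contrasts with chunk-wise popping.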

Additional Files and Images

Additional images and videos

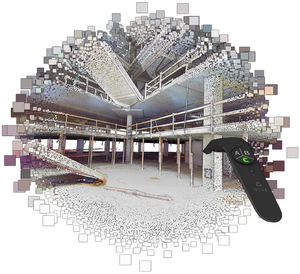

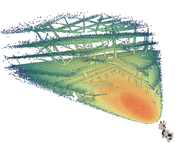

birds_eye:

Continuous LOD subsample selected for the user's current viewpoint.

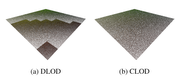

distribution:

More uniform distribution of points in screen space, compared to staircase artifacts from discrete LOD methods.

BibTeX

@inproceedings{schuetz-2019-CLOD,
  title = "Real-Time Continuous Level of Detail Rendering of Point Clouds",
  author = "Markus Sch{\"u}tz and Katharina Kr{\"o}sl and Michael Wimmer",
  year = "2019",
  abstract = "Real-time rendering of large point clouds requires acceleration
    structures that reduce the number of points drawn on screen.
    State-of-the-art algorithms group and render points in hierarchically
    organized chunks with varying extent and density, which results in sudden
    changes of density from one level of detail to another, as well as
    noticeable popping artifacts when additional chunks are blended in or out.
    These popping artifacts are especially noticeable at lower levels of
    detail, and consequently in virtual reality, where high performance
    requirements impose a reduction in detail. We propose a continuous
    level-of-detail method that exhibits gradual rather than sudden changes in
    density. Our method continuously recreates a down-sampled vertex buffer
    from the full point cloud, based on camera orientation, position, and
    distance to the camera, in a point-wise rather than chunk-wise fashion and
    at speeds up to 17 million points per millisecond. As a result, additional
    details are blended in or out in a less noticeable and significantly less
    irritating manner compared to the state of the art. The improved
    acceptance of our method was successfully evaluated in a user study.",
  month = mar,
  publisher = "IEEE",
  location = "Osaka, Japan",
  event = "IEEE VR 2019, the 26th IEEE Conference on Virtual Reality and 3D User Interfaces",
  doi = "10.1109/VR.2019.8798284",
  booktitle = "2019 IEEE Conference on Virtual Reality and 3D User Interfaces",
  pages = "103--110",
  keywords = "point clouds, virtual reality, VR",
  URL = "https://www.cg.tuwien.ac.at/research/publications/2019/schuetz-2019-CLOD/",
}