Information

- Publication Type: Master Thesis

- Date: November 2012

Abstract

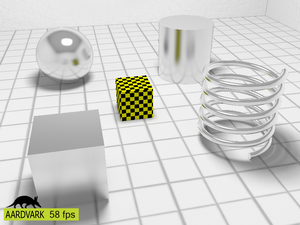

Interactive walkthroughs of virtual scenes are not only common in fictional settings such as entertainment and video games, but also a popular way of presenting novel architecture, furnishings or illumination. Due to the high performance requirements of such interactive applications, the presentable detail and quality are limited by the computational hardware. A realistic appearance of materials is one of the most crucial aspects of scene immersion during walkthroughs, and computing it at interactive frame rates is a challenging task.

In this thesis, an algorithm is presented that renders static scenes featuring view-dependent materials in real time. For walkthroughs of static scenes, all light propagation except the last view-dependent bounce can be precomputed and stored as diffuse irradiance light maps together with the scene geometry. The specular part of reflection and transmission is then computed dynamically by approximately integrating the incident light according to the view and the local material properties. For this purpose, the incident radiance distribution of each object is approximated by a single static environment map that is obtained by rendering the light-mapped scene as seen from the object. For large planar reflectors, a mirror rendering is performed every frame to approximate the incident light distribution instead of using a static environment map. Materials are represented using a parametric model that is particularly suitable for fitting to measured reflectance data. Fitting the parameters of a compact model to material measurements provides a straightforward way of reproducing the light interactions of real-world substances on a screen.
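The per-frame mirror rendering for planar reflectors can be illustrated with the standard reflection of a camera across a plane. The following is a minimal sketch under stated assumptions, not the thesis implementation: the function names `reflect_point` and `reflect_direction` and the tuple-based vectors are hypothetical and chosen only for illustration.

```python
import math


def _normalize(v):
    """Return v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def reflect_point(point, plane_point, plane_normal):
    """Mirror a position (e.g. the eye) across the reflector plane."""
    n = _normalize(plane_normal)
    offset = tuple(p - q for p, q in zip(point, plane_point))
    dist = _dot(offset, n)  # signed distance of the point to the plane
    return tuple(p - 2.0 * dist * c for p, c in zip(point, n))


def reflect_direction(direction, plane_normal):
    """Mirror a direction (e.g. the view vector) across the reflector plane."""
    n = _normalize(plane_normal)
    d = _dot(direction, n)
    return tuple(v - 2.0 * d * c for v, c in zip(direction, n))


# Hypothetical usage: a floor reflector through the origin with normal +y.
mirrored_eye = reflect_point((0.0, 2.0, 5.0), (0.0, 0.0, 0.0), (0.0, 1.0, 0.0))
mirrored_view = reflect_direction((1.0, -1.0, 0.0), (0.0, 1.0, 0.0))
```

Rendering the scene from the mirrored eye yields the image seen in the reflector. Because a reflection flips handedness, a renderer built this way typically also has to reverse its triangle winding order for the mirror pass.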

During walkthroughs, the view-dependent part of the local illumination integral is approximated by sampling the representation of incident light while weighting the samples according to the material properties. Noise-free rendering is achieved by reusing the exact same sampling pattern at all pixels of a shaded object, and by filtering the samples using MIP-maps of the incident light representation. All available samples are placed regularly within the specular lobe to achieve a uniform, symmetric coverage of the most important part of the integration domain, even when using very few (5 to 20) samples. Thus, the proposed algorithm achieves a biased but stable and convincing material appearance at real-time frame rates. It is faster than existing algorithms based on random sampling, as fewer samples suffice to achieve a smooth and uniform coverage of specular lobes.
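The sampling idea described above can be sketched as follows. This is an illustration under explicit assumptions, not the method of the thesis: the abstract does not specify the pattern, the lobe shape, or the MIP level selection, so the sketch substitutes a Fibonacci spiral over a spherical cap, a Phong-like `cos^n` weight, and a MIP level derived from the ratio of per-sample solid angle to cube-map texel solid angle. All names (`lobe_pattern`, `mip_level`, `shade_specular`, `env_lookup`) are hypothetical.

```python
import math


def lobe_pattern(num_samples, max_angle):
    """Fixed, non-random directions in the lobe's local frame (axis = +z).

    Assumption: a Fibonacci spiral over a cap of half-angle max_angle,
    giving each sample the same solid angle.
    """
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    cos_max = math.cos(max_angle)
    dirs = []
    for i in range(num_samples):
        cos_t = 1.0 - (1.0 - cos_max) * (i + 0.5) / num_samples
        sin_t = math.sqrt(max(0.0, 1.0 - cos_t * cos_t))
        phi = i * golden_angle
        dirs.append((sin_t * math.cos(phi), sin_t * math.sin(phi), cos_t))
    return dirs


def mip_level(num_samples, max_angle, face_res):
    """MIP level whose texel footprint roughly matches one sample's solid angle."""
    sample_sa = 2.0 * math.pi * (1.0 - math.cos(max_angle)) / num_samples
    texel_sa = 4.0 * math.pi / (6.0 * face_res * face_res)  # cube-map average
    return max(0.0, 0.5 * math.log2(sample_sa / texel_sa))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def shade_specular(reflect_dir, exponent, env_lookup,
                   num_samples=12, max_angle=0.5, face_res=256):
    """Weighted average of prefiltered environment samples around reflect_dir.

    env_lookup(direction, lod) is a caller-supplied stand-in for a
    MIP-mapped environment map fetch returning a scalar radiance.
    """
    axis = _normalize(reflect_dir)
    helper = (0.0, 0.0, 1.0) if abs(axis[2]) < 0.999 else (1.0, 0.0, 0.0)
    tangent = _normalize(_cross(helper, axis))
    bitangent = _cross(axis, tangent)
    lod = mip_level(num_samples, max_angle, face_res)
    total, weight_sum = 0.0, 0.0
    for x, y, z in lobe_pattern(num_samples, max_angle):
        direction = tuple(x * t + y * b + z * a
                          for t, b, a in zip(tangent, bitangent, axis))
        weight = z ** exponent  # Phong-like lobe: cos^n of the angle to the axis
        total += weight * env_lookup(direction, lod)
        weight_sum += weight
    return total / weight_sum
```

Because `lobe_pattern` is deterministic, every pixel of an object sees the same sample layout, so the error shows up as a consistent bias rather than as per-pixel noise. Using fewer samples raises the MIP level, which trades sharpness for smoothness.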

Weblinks

No further information available.

BibTeX

@mastersthesis{Muehlbacher_2012_RRM,
  title = "Real-Time Rendering of Measured Materials",
  author = "Thomas M\"{u}hlbacher",
  year = "2012",
  abstract = "Interactive walkthroughs of virtual scenes are not only
    common in fictional settings such as entertainment and video
    games, but also a popular way of presenting novel
    architecture, furnishings or illumination. Due to the high
    performance requirements of such interactive applications,
    the presentable detail and quality are limited by the
    computational hardware. A realistic appearance of materials
    is one of the most crucial aspects to scene immersion during
    walkthroughs, and computing it at interactive frame rates is
    a challenging task. In this thesis an algorithm is
    presented that achieves the rendering of static scenes
    featuring view-dependent materials in real-time. For
    walkthroughs of static scenes, all light propagation but the
    last view-dependent bounce can be precomputed and stored as
    diffuse irradiance light maps together with the scene
    geometry. The specular part of reflection and transmission
    is then computed dynamically by integrating the incident
    light approximatively according to view and local material
    properties. For this purpose, the incident radiance
    distribution of each object is approximated by a single
    static environment map that is obtained by rendering the
    light-mapped scene as seen from the object. For large planar
    reflectors, a mirror rendering is performed every frame to
    approximate the incident light distribution instead of a
    static environment map. Materials are represented using a
    parametric model that is particularly suitable for fitting
    to measured reflectance data. Fitting the parameters of a
    compact model to material measurements provides a
    straightforward approach of reproducing light interactions
    of real-world substances on a screen. During walkthroughs,
    the view-dependent part of the local illumination integral
    is approximated by sampling the representation of incident
    light while weighting the samples according to the material
    properties. Noise-free rendering is achieved by reusing the
    exact same sampling pattern at all pixels of a shaded
    object, and by filtering the samples using MIP-maps of the
    incident light representation. All available samples are
    regularly placed within the specular lobe to achieve a
    uniform symmetric coverage of the most important part of the
    integration domain even when using very few (5-20) samples.
    Thus, the proposed algorithm achieves a biased but stable
    and convincing material appearance at real-time frame rates.
    It is faster than existing random-based sampling algorithms,
    as fewer samples suffice to achieve a smooth and uniform
    coverage of specular lobes.",
  month = nov,
  address = "Favoritenstrasse 9-11/E193-02, A-1040 Vienna, Austria",
  school = "Institute of Computer Graphics and Algorithms, Vienna
    University of Technology",
  URL = "https://www.cg.tuwien.ac.at/research/publications/2012/Muehlbacher_2012_RRM/",
}
