
VRVis Competence Center

The VRVis K1 Research Center is the leading application-oriented research center in the area of virtual reality (VR) and visualization (Vis) in Austria and is internationally recognized. Extensive information about the VRVis Center is available on the center's website.

X-Mas Cards

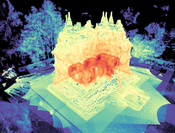

Every year, a Christmas card showing aspects of our research projects is produced and sent out.

Modeling the World at Scale

Vision: reconstruct a model of the world that permits online level-of-detail extraction.

Photogrammetry made easy

Superhumans - Walking Through Walls

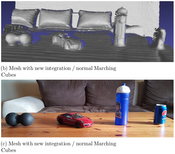

In recent years, virtual and augmented reality have gained widespread attention because of newly developed head-mounted displays. For the first time, mass-market penetration seems plausible. At the same time, range sensors are on the verge of being integrated into smartphones, as evidenced by prototypes such as the Google Tango device, making ubiquitous online acquisition of 3D data a possibility. The combination of these two technologies, displays and sensors, promises applications in which users are immersed directly in 3D data that has just been captured live.

However, the captured data needs to be processed and structured before it can be displayed. For example, sensor noise needs to be removed and normals need to be estimated for local surface reconstruction. The challenge is that these operations involve large amounts of data, and to ensure a lag-free user experience they must be performed in real time, i.e., in just a few milliseconds per frame.

In this proposal, we exploit the fact that dynamic point clouds captured in real time are often relevant for display and interaction only in the current frame and inside the current view frustum. In particular, we propose a new view-dependent data structure that permits efficient connectivity creation and traversal of unstructured data, which will speed up surface recovery, e.g., for collision detection. Occlusions are classified at no extra cost, which allows quick access to occluded layers in the current view. This enables new methods to explore and manipulate dynamic 3D scenes that go beyond interaction methods relying on physics-based metaphors like walking or flying, lifting interaction with 3D environments to a “superhuman” level.
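The proposal does not detail the view-dependent data structure itself. As a rough, hypothetical illustration of the idea only, the Python sketch below assumes points are already in camera space and uses an invented pinhole camera: it buckets points by the pixel they project to and sorts each bucket by depth. The nearest point per pixel then forms the visible layer, deeper entries approximate occluded layers, and adjacent pixels supply candidate neighbors for connectivity (e.g., for normal estimation). The names `PinholeCamera`, `build_view_grid`, `layer` and `front_neighbors` are made up for this example and are not the project's actual method.

```python
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class PinholeCamera:
    """Hypothetical pinhole camera; input points are in camera space (+z forward)."""
    focal: float  # focal length in pixels
    width: int    # image width in pixels
    height: int   # image height in pixels

    def project(self, x, y, z):
        """Return the integer pixel (px, py) of a point, or None if outside the frustum."""
        if z <= 0.0:
            return None  # behind the camera
        px = math.floor(self.focal * x / z + self.width / 2)
        py = math.floor(self.focal * y / z + self.height / 2)
        if 0 <= px < self.width and 0 <= py < self.height:
            return (px, py)
        return None


def build_view_grid(points, cam):
    """Bucket points by pixel; each bucket holds (depth, index) pairs sorted near to far."""
    grid = defaultdict(list)
    for i, (x, y, z) in enumerate(points):
        pix = cam.project(x, y, z)
        if pix is not None:
            grid[pix].append((z, i))
    for bucket in grid.values():
        bucket.sort()
    return dict(grid)


def layer(grid, k):
    """Map pixel -> index of the k-th nearest point (k = 0 is the visible surface)."""
    return {pix: b[k][1] for pix, b in grid.items() if len(b) > k}


def front_neighbors(grid, pix, radius=1):
    """Visible-layer point indices in the (2*radius+1)^2 pixel window around pix."""
    px, py = pix
    result = []
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            bucket = grid.get((px + dx, py + dy))
            if bucket:
                result.append(bucket[0][1])
    return result


# Usage: point 1 sits behind point 0 in the same pixel; point 3 is behind the camera.
cam = PinholeCamera(focal=100.0, width=64, height=64)
pts = [(0.0, 0.0, 1.0), (0.0, 0.0, 3.0), (0.015, 0.0, 1.0), (0.0, 0.0, -1.0)]
grid = build_view_grid(pts, cam)
visible = layer(grid, 0)    # {(32, 32): 0, (33, 32): 2}
hidden = layer(grid, 1)     # {(32, 32): 1}
nbrs = front_neighbors(grid, (32, 32))  # [0, 2]
```

Ranking by depth alone treats every point behind the first one in a pixel as occluded, even if it lies on the same surface; a practical variant would probably group points within a depth tolerance into one layer.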

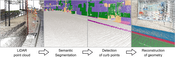

Efficient workflow for transforming large 3D point clouds into Building Information Models with user-assisted automation

Smart Communities and Technologies: 3D Spatialization

The Research Cluster "Smart Communities and Technologies" (Smart CT) at TU Wien will provide the scientific underpinnings for next-generation complex smart city and community infrastructures. Cities are ever-evolving, complex cyber-physical systems of systems covering a multitude of different areas. The initial concept of smart cities and communities started with cities using communication technologies to deliver services to their citizens and evolved into using information technology to be smarter and more efficient in the utilization of their resources. In recent years, however, information technology has changed significantly, and with it the resources and areas addressable by a smart city have broadened considerably. They now cover areas like smart buildings, smart products and production, smart traffic systems and roads, autonomous driving, smart grids for managing energy hubs and electric car utilization, and urban environmental systems research.

3D spatialization links the internet-of-cities infrastructure to the actual 3D world in which a city is embedded, enabling advanced computation and visualization tasks. Sensors, actuators and users are embedded in a complex 3D environment that is constantly changing. Acquiring, modeling and visualizing this dynamic 3D environment are the challenges we address with methods from Visual Computing and Computer Graphics. 3D Spatialization aims to make a city aware of its 3D environment, allowing it to perform spatial reasoning to solve problems like visibility, accessibility, lighting, and energy efficiency.
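The text does not say how such visibility queries are computed. As a hypothetical illustration of one spatial-reasoning task, the sketch below checks whether a sensor can see a target over a 2.5D city height grid, assuming one surface height per grid cell (real city models are full 3D). The function name `line_of_sight` and its parameters are invented for this example. It samples points along the segment, so very thin obstacles can be missed; an exact grid traversal (a DDA-style cell walk) would avoid that.

```python
import math


def line_of_sight(heights, a, b, samples_per_cell=4):
    """Return True if the straight segment from a to b stays above the height grid.

    heights: 2D list; heights[row][col] is the surface height of that cell (assumed 2.5D model).
    a, b: (x, y, z) endpoints in grid units, x = column, y = row; both assumed inside the grid.
    samples_per_cell: sampling density along the segment (approximate test, not exact).
    """
    (ax, ay, az), (bx, by, bz) = a, b
    dist = math.hypot(bx - ax, by - ay)
    n = max(1, math.ceil(dist * samples_per_cell))
    # Check interior samples only; the endpoints themselves are the sensor and target.
    for s in range(1, n):
        t = s / n
        x = ax + t * (bx - ax)
        y = ay + t * (by - ay)
        z = az + t * (bz - az)
        col, row = math.floor(x), math.floor(y)
        if heights[row][col] >= z:
            return False  # the ray hits a building or terrain cell
    return True


# Usage: a 5x5 flat grid with one 10-unit-tall building in cell (row 2, col 2).
city = [[0.0] * 5 for _ in range(5)]
city[2][2] = 10.0
blocked = line_of_sight(city, (0.5, 2.5, 2.0), (4.5, 2.5, 2.0))    # ray passes through the building
over_roof = line_of_sight(city, (0.5, 2.5, 20.0), (4.5, 2.5, 20.0))  # ray passes above it
beside = line_of_sight(city, (0.5, 0.5, 2.0), (4.5, 0.5, 2.0))       # ray runs along an empty row
```

Running the same query from many candidate positions gives a simple viewshed, which is one way such a check could feed into questions like sensor placement.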