Information

- Publication Type: Journal Paper with Conference Talk

- Workgroup(s)/Project(s): not specified

- Date: December 2022

- Journal: ACM Transactions on Graphics

- Volume: 41

- Number: 6

- Location: Daegu

- Lecturer: Jozef Hladky

- ISSN: 1557-7368

- Event: Siggraph Asia

- Publisher: ASSOC COMPUTING MACHINERY

- Conference date: 6 December 2022 – 9 December 2022

- Keywords: streaming, real-time rendering, virtual reality

Abstract

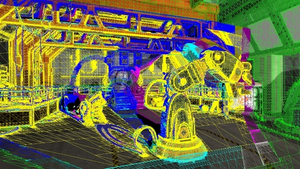

Cloud rendering is attractive when targeting thin client devices such as phones or VR/AR headsets, or any situation where a high-end GPU is not available due to thermal or power constraints. However, it introduces the challenge of streaming rendered data over a network in a manner that is robust to latency and potential dropouts. Current approaches range from streaming transmitted video and correcting it on the client---which fails in the presence of disocclusion events---to solutions where the server sends geometry and all rendering is performed on the client. To balance the competing goals of disocclusion robustness and minimal client workload, we introduce QuadStream, a new streaming technique that reduces motion-to-photon latency by allowing clients to render novel views on the fly and is robust against disocclusions. Our key idea is to transmit an approximate geometric scene representation to the client which is independent of the source geometry and can render both the current view frame and nearby adjacent views. Motivated by traditional macroblock approaches to video codec design, we decompose the scene seen from positions in a view cell into a series of view-aligned quads from multiple views, or QuadProxies. By operating on a rasterized G-Buffer, our approach is independent of the representation used for the scene itself. Our technical contributions are an efficient parallel quad generation, merging, and packing strategy for proxy views that cover potential client movement in a scene; a packing and encoding strategy allowing masked quads with depth information to be transmitted as a frame coherent stream; and an efficient rendering approach that takes advantage of modern hardware capabilities to turn our QuadStream representation into complete novel views on thin clients. 
According to our experiments, our approach achieves superior quality compared both to streaming methods that rely on simple video data and to geometry-based streaming.

BibTeX

@article{hladky-2022-QS,
  title =      "QuadStream: A Quad-Based Scene Streaming Architecture for
    Novel Viewpoint Reconstruction",
  author =     "Jozef Hladky and Michael Stengel and Nicholas Vining and
    Bernhard Kerbl and Hans-Peter Seidel and Markus Steinberger",
  year =       "2022",
  abstract =   "Cloud rendering is attractive when targeting thin client
    devices such as phones or VR/AR headsets, or any situation
    where a high-end GPU is not available due to thermal or
    power constraints. However, it introduces the challenge of
    streaming rendered data over a network in a manner that is
    robust to latency and potential dropouts. Current approaches
    range from streaming transmitted video and correcting it on
    the client---which fails in the presence of disocclusion
    events---to solutions where the server sends geometry and
    all rendering is performed on the client. To balance the
    competing goals of disocclusion robustness and minimal
    client workload, we introduce QuadStream, a new streaming
    technique that reduces motion-to-photon latency by allowing
    clients to render novel views on the fly and is robust
    against disocclusions. Our key idea is to transmit an
    approximate geometric scene representation to the client
    which is independent of the source geometry and can render
    both the current view frame and nearby adjacent views.
    Motivated by traditional macroblock approaches to video
    codec design, we decompose the scene seen from positions in
    a view cell into a series of view-aligned quads from
    multiple views, or QuadProxies. By operating on a rasterized
    G-Buffer, our approach is independent of the representation
    used for the scene itself. Our technical contributions are
    an efficient parallel quad generation, merging, and packing
    strategy for proxy views that cover potential client
    movement in a scene; a packing and encoding strategy
    allowing masked quads with depth information to be
    transmitted as a frame coherent stream; and an efficient
    rendering approach that takes advantage of modern hardware
    capabilities to turn our QuadStream representation into
    complete novel views on thin clients. According to our
    experiments, our approach achieves superior quality compared
    both to streaming methods that rely on simple video data and
    to geometry-based streaming.",
  month =      dec,
  journal =    "ACM Transactions on Graphics",
  volume =     "41",
  number =     "6",
  issn =       "1557-7368",
  publisher =  "ASSOC COMPUTING MACHINERY",
  keywords =   "streaming, real-time rendering, virtual reality",
  URL =        "https://www.cg.tuwien.ac.at/research/publications/2022/hladky-2022-QS/",
}