Information

- Publication Type: Journal Paper (without talk)

- Workgroup(s)/Project(s):

- Date: April 2022

- DOI: 10.1016/j.cag.2022.04.013

- ISSN: 1873-7684

- Journal: Computers and Graphics

- Open Access: yes

- Number of Pages: 12

- Volume: 105

- Publisher: Elsevier

- Pages: 73 – 84

- Keywords: Concept spaces, Latent spaces, Similarity maps, Visual exploratory analysis

Abstract

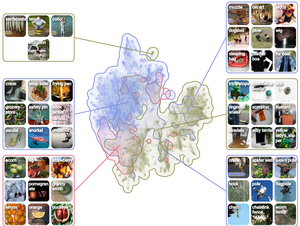

Similarity maps show dimensionality-reduced activation vectors of a high number of data points and thereby can help to understand which features a neural network has learned from the data. However, similarity maps have severely limited expressiveness for large datasets with hundreds of thousands of data instances and thousands of labels, such as ImageNet or word2vec. In this work, we present “concept splatters” as a scalable method to interactively explore similarities between data instances as learned by the machine through the lens of human-understandable semantics. Our approach enables interactive exploration of large latent spaces on multiple levels of abstraction. We present a web-based implementation that supports interactive exploration of tens of thousands of word vectors of word2vec and CNN feature vectors of ImageNet. In a qualitative study, users could effectively discover spurious learning strategies of the network, ambiguous labels, and could characterize reasons for potential confusion.

Additional Files and Images

Weblinks

- paper: Link to open access paper

- online demo

- Entry in reposiTUm (TU Wien Publication Database)

- DOI: 10.1016/j.cag.2022.04.013

BibTeX

@article{grossmann-2022-conceptSplatters,
  title = "Concept splatters: Exploration of latent spaces based on
    human interpretable concepts",
  author = "Nicolas Grossmann and Eduard Gr{\"o}ller and Manuela Waldner",
  year = "2022",
  abstract = "Similarity maps show dimensionality-reduced activation
    vectors of a high number of data points and thereby can help
    to understand which features a neural network has learned
    from the data. However, similarity maps have severely
    limited expressiveness for large datasets with hundreds of
    thousands of data instances and thousands of labels, such as
    ImageNet or word2vec. In this work, we present “concept
    splatters” as a scalable method to interactively explore
    similarities between data instances as learned by the
    machine through the lens of human-understandable semantics.
    Our approach enables interactive exploration of large latent
    spaces on multiple levels of abstraction. We present a
    web-based implementation that supports interactive
    exploration of tens of thousands of word vectors of word2vec
    and CNN feature vectors of ImageNet. In a qualitative study,
    users could effectively discover spurious learning
    strategies of the network, ambiguous labels, and could
    characterize reasons for potential confusion.",
  month = apr,
  doi = "10.1016/j.cag.2022.04.013",
  issn = "1873-7684",
  journal = "Computers and Graphics",
  numpages = "12",
  volume = "105",
  publisher = "Elsevier",
  pages = "73--84",
  keywords = "Concept spaces, Latent spaces, Similarity maps, Visual
    exploratory analysis",
  URL = "https://www.cg.tuwien.ac.at/research/publications/2022/grossmann-2022-conceptSplatters/",
}

video
