Information

- Publication Type: Journal Paper with Conference Talk

- Workgroup(s)/Project(s):

- Date: October 2021

- Journal: Computer Graphics Forum

- Volume: 40

- Open Access: yes

- Lecturer: Stefan Sietzen

- Event: Pacific Graphics 2021

- DOI: 10.1111/cgf.14418

- Call for Papers: Call for Paper

- Number of Pages: 12

- Publisher: John Wiley and Sons

- Conference date: 18–21 October 2021

- Pages: 253 – 264

- Keywords: Computer Graphics and Computer-Aided Design

Abstract

While convolutional neural networks (CNNs) have found wide adoption as state-of-the-art models for image-related tasks, their predictions are often highly sensitive to small input perturbations, which the human vision is robust against. This paper presents Perturber, a web-based application that allows users to instantaneously explore how CNN activations and predictions evolve when a 3D input scene is interactively perturbed. Perturber offers a large variety of scene modifications, such as camera controls, lighting and shading effects, background modifications, object morphing, as well as adversarial attacks, to facilitate the discovery of potential vulnerabilities. Fine-tuned model versions can be directly compared for qualitative evaluation of their robustness. Case studies with machine learning experts have shown that Perturber helps users to quickly generate hypotheses about model vulnerabilities and to qualitatively compare model behavior. Using quantitative analyses, we could replicate users' insights with other CNN architectures and input images, yielding new insights about the vulnerability of adversarially trained models.

Additional Files and Images

Additional images and videos

teaser:

Activations and feature visualizations for neurons associated with complex shapes and curvatures in layer mixed4a in the standard model. Note how rotating the input model causes activation changes for oriented shape detectors.

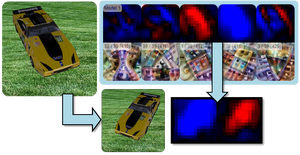

Additional files

supplementary document:

Additional use cases and detailed reports from the case study

Weblinks

- online tool

Perturber online tool for interactive analysis of CNN robustness

- Entry in reposiTUm (TU Wien Publication Database)

- Entry in the publication database of TU-Wien

- DOI: 10.1111/cgf.14418

BibTeX

@article{sietzen-2021-perturber,
  title =      "Interactive Analysis of CNN Robustness",
  author =     "Stefan Sietzen and Mathias Lechner and Judy Borowski and
               Ramin Hasani and Manuela Waldner",
  year =       "2021",
  abstract =   "While convolutional neural networks (CNNs) have found wide
               adoption as state-of-the-art models for image-related tasks,
               their predictions are often highly sensitive to small input
               perturbations, which the human vision is robust against.
               This paper presents Perturber, a web-based application that
               allows users to instantaneously explore how CNN activations
               and predictions evolve when a 3D input scene is
               interactively perturbed. Perturber offers a large variety of
               scene modifications, such as camera controls, lighting and
               shading effects, background modifications, object morphing,
               as well as adversarial attacks, to facilitate the discovery
               of potential vulnerabilities. Fine-tuned model versions can
               be directly compared for qualitative evaluation of their
               robustness. Case studies with machine learning experts have
               shown that Perturber helps users to quickly generate
               hypotheses about model vulnerabilities and to qualitatively
               compare model behavior. Using quantitative analyses, we
               could replicate users' insights with other CNN architectures
               and input images, yielding new insights about the
               vulnerability of adversarially trained models.",
  month =      oct,
  journal =    "Computer Graphics Forum",
  volume =     "40",
  doi =        "10.1111/cgf.14418",
  publisher =  "John Wiley and Sons",
  pages =      "253--264",
  keywords =   "Computer Graphics and Computer-Aided Design",
  URL =        "https://www.cg.tuwien.ac.at/research/publications/2021/sietzen-2021-perturber/",
}
