Eulerian Video Magnification

By Galya Pavlova

This is an implementation of the paper "Eulerian Video Magnification for Revealing Subtle Changes in the World" by Wu et al. The main idea is to emphasize subtle changes in motion and/or color in a given video. First, a spatial filter is applied to the video sequence. Next, a temporal bandpass filter selects the frequencies of interest. Finally, the filtered signal is magnified and added back to the original video.
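The temporal filtering and magnification steps can be sketched as follows. This is a minimal illustration using NumPy only, not the code from this project: the function names `ideal_bandpass` and `magnify` and the `(T, H, W, C)` frame layout are assumptions made for the example, and the spatial decomposition is left out entirely.

```python
import numpy as np

def ideal_bandpass(frames, fps, low_hz, high_hz):
    """Keep only temporal frequencies in [low_hz, high_hz].

    frames: float array of shape (T, H, W, C), one entry per video frame
    (hypothetical layout chosen for this sketch).
    """
    n_frames = frames.shape[0]
    # Frequency (in Hz) of each bin of the real-valued FFT along time.
    freqs = np.fft.rfftfreq(n_frames, d=1.0 / fps)
    spectrum = np.fft.rfft(frames, axis=0)
    # Zero every bin outside the pass band ("ideal" filter).
    keep = (freqs >= low_hz) & (freqs <= high_hz)
    spectrum[~keep] = 0
    return np.fft.irfft(spectrum, n=n_frames, axis=0)

def magnify(frames, fps, low_hz, high_hz, alpha):
    """Amplify the band-passed signal by alpha and add it to the input."""
    filtered = ideal_bandpass(frames, fps, low_hz, high_hz)
    return frames + alpha * filtered
```

For example, `magnify(frames, fps=30, low_hz=0.8, high_hz=1.2, alpha=10)` would leave the constant background and faster variations untouched while scaling a 1 Hz variation to eleven times its original amplitude.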

Implementation & Requirements

The implementation uses Python and OpenCV, whereas the paper's authors implemented theirs in MATLAB. There are two approaches, depending on what the user wants to magnify: motion or color. For motion magnification, a Laplacian pyramid is built and a first-order Butterworth bandpass filter is then applied. For color magnification, a Gaussian pyramid is built and an ideal temporal filter is then applied using a discrete Fourier transform. In both approaches the filtered video is multiplied by a magnification factor. Motion magnification additionally uses a spatial attenuation factor to reduce the impact of the higher spatial frequencies; the user can choose between linear attenuation, i.e. gradually reducing the magnification factor to zero, and cutting the magnification factor directly to zero. To limit color artifacts, the video is processed in the YIQ color space and then transformed back to RGB. In this step the user can specify a chrominance attenuation factor, which is applied to the I and Q components. Processing a video takes a while because the algorithms are computationally expensive. A user-friendly GUI is built with PyQt.
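The YIQ step with chrominance attenuation might look roughly like the sketch below. It is an illustration under stated assumptions rather than the project's actual code: the matrix holds the commonly quoted NTSC coefficients rounded to three decimals, pixel values are assumed to be floats in [0, 1], and the names `rgb_to_yiq`, `yiq_to_rgb` and `add_attenuated` are hypothetical.

```python
import numpy as np

# Commonly quoted NTSC RGB -> YIQ coefficients, rounded to three decimals
# (assumed here; the project may use slightly different values).
RGB_TO_YIQ = np.array([
    [0.299,  0.587,  0.114],   # Y: luminance
    [0.596, -0.274, -0.322],   # I: chrominance
    [0.211, -0.523,  0.312],   # Q: chrominance
])
# Use the exact numerical inverse so that a round trip is lossless.
YIQ_TO_RGB = np.linalg.inv(RGB_TO_YIQ)

def rgb_to_yiq(rgb):
    """Convert an array of shape (..., 3) from RGB to YIQ."""
    return rgb @ RGB_TO_YIQ.T

def yiq_to_rgb(yiq):
    """Convert an array of shape (..., 3) from YIQ back to RGB."""
    return yiq @ YIQ_TO_RGB.T

def add_attenuated(original_rgb, filtered_yiq, alpha, chroma_attenuation):
    """Add the magnified signal to the original video.

    The I and Q channels of the filtered signal are scaled by
    chroma_attenuation in addition to the magnification factor alpha.
    """
    gain = alpha * np.array([1.0, chroma_attenuation, chroma_attenuation])
    out_yiq = rgb_to_yiq(original_rgb) + gain * filtered_yiq
    # Clip back to the valid range assumed for float RGB values.
    return np.clip(yiq_to_rgb(out_yiq), 0.0, 1.0)
```

With `chroma_attenuation=0` only the luminance is magnified, so the added signal changes brightness without shifting hue; with a value of 1 all three channels are magnified equally.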

The source code was tested on both Windows 10 and Linux Mint. It requires Python 3, OpenCV 3.4 for the spatial decomposition, SciPy for the temporal filter, and PyQt5 for the GUI. Autogenerated documentation can be found in the Documentation section. For both Linux and Windows there is also a standalone application, which does not require the source code. Otherwise, the program can be started from the "main.py" file in the source folder. Both packages include an additional folder with some of the videos used by the paper's authors.

How to use

To start the Windows application, navigate to the "evm-app" folder and run the "main.exe" file. On Linux, navigate to the "evm-app" folder and type "./main" in a terminal. For reference parameters, see the original paper, which includes a table of the parameter values used by the authors.

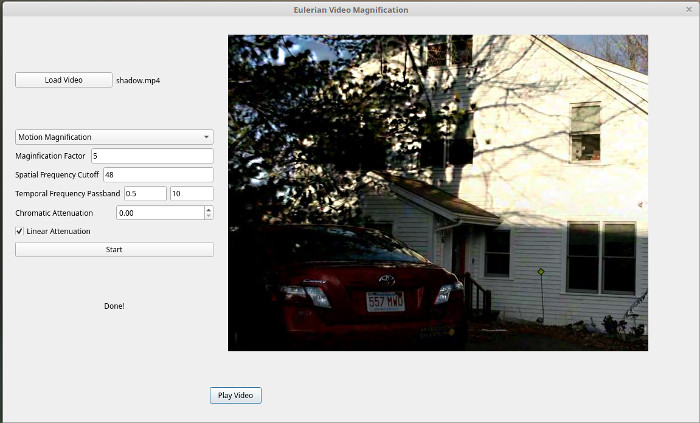

When the program starts, an empty GUI appears. The section on the left is where the user chooses the parameters. First, the user clicks the "Load" button to select the video to be processed; the name of the video then appears next to the button. Next, the user sets the parameters and clicks the "Start" button. After a while the text "Done!" appears beneath the "Start" button, indicating that processing has finished and the output video has been saved in the same folder as the program. To see the resulting video, the user can click the "Play" button and the new video will be played.

Results & Improvements

As the example below shows, this implementation reproduces the results reported in the paper "Eulerian Video Magnification for Revealing Subtle Changes in the World". In the filtered video (right), the heartbeat of the baby is clearly more visible than in the original video.

The original video

The magnified video

The program works very well for magnifying motion, producing very little noise and few color artifacts. For color magnification, the results are not as good as those of the paper's authors. One suggested improvement is to implement a mask, as mentioned in the paper. The user could then specify the region to be magnified, and the color artifacts would not affect the background of the video.
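One way such a mask could be applied is sketched below. This is only an illustration of the suggested improvement, which the program does not currently implement; the function name `apply_region_mask`, the `(T, H, W, C)` frame layout and the per-pixel weights in [0, 1] are all assumptions.

```python
import numpy as np

def apply_region_mask(original, magnified, mask):
    """Blend the magnified video into the original inside a region only.

    original, magnified: float arrays of shape (T, H, W, C).
    mask: array of shape (H, W) with weights in [0, 1]; 1 keeps the
    magnified pixel, 0 keeps the original pixel (hypothetical convention).
    """
    # Broadcast the 2D mask over the time and channel axes.
    weights = mask.astype(original.dtype)[None, :, :, None]
    return original + weights * (magnified - original)
```

Pixels where the mask is zero are returned unchanged, so artifacts introduced by the magnification stay confined to the selected region. A soft-edged mask with intermediate weights would give a gradual transition at the region boundary.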

Another improvement would be to optimize the processing time. However, this was not a goal of this project, since the main idea was to reproduce the methods described in the paper.