Number5

Visualisierung 2 Project - Florian Schober (0828151, f.schober@live.com), Andreas Walch (0926780, walch.andreas89@gmail.com)

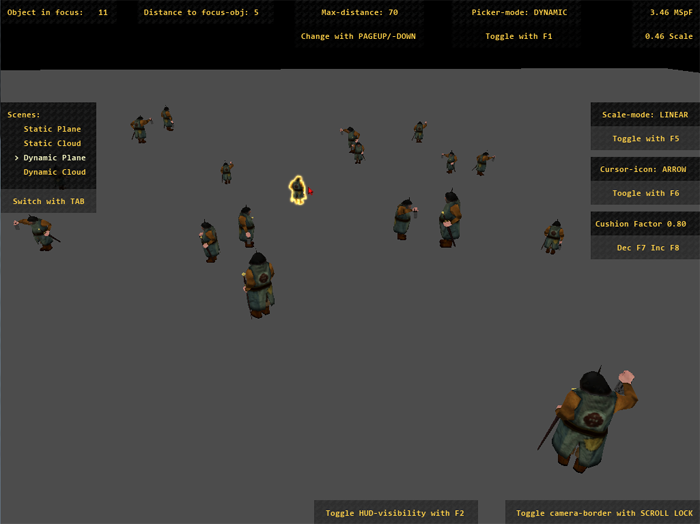

The paper addresses the difficulty of accurately picking an object in a 3D environment. By manipulating the cursor acceleration according to the distance to the nearest pickable object, the user should be able to hover over the desired object quickly. To this end, the travelled distance in visual-space (movement on the screen) is decoupled from the travelled distance in motor-space (movement of the input device). Movements in visual-space close to a pickable object can then be slowed down, while movements in areas without any pickable object can be accelerated.

Every frame, the scene is additionally rendered to an id-buffer in the same rendering-pass. This id-buffer stores the numerical ids of all objects in the scene, where 0 represents the background or non-pickable objects. When needed, the id-buffer is read back (in whole or in part) from graphics-memory to RAM and reorganized into an acceleration structure. Every frame, we use this structure to search for the nearest neighbour to the cursor-position in screen-space and store its id and its distance to the cursor. The cursor movement until the next frame is then manipulated using our scale function. At the end of the frame we provide visual feedback in two ways: we highlight the pickable object nearest to the cursor, and we change the cursor color according to its distance to that object.

When the scene is rendered, it is additionally rendered to a second render-target in the same rendering-pass. In our case this is a 16-bit unsigned integer texture. We decided to use a texture instead of the stencil-buffer, since consumer hardware usually provides only 8-bit stencil-buffers. Our implementation can use as many bits per id as the graphics-card is able to store in a texture; 16 bits were sufficient for our purposes.

To find the pickable object closest to the cursor, we use a quadtree to accelerate the nearest-neighbour search, as suggested in the paper by Elmqvist and Fekete. The quadtree holds an image-pyramid whose lowest (and largest) level is the part of the id-buffer that was read back to RAM. Every other level is built by checking the pixels beneath each of its pixels: if at least one of them is non-zero the pixel is set to 1, otherwise to 0. The top level therefore consists of a single pixel that represents the whole scene (if it is 0, there is no pickable object on the screen).
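The construction of the pyramid can be sketched as follows. This is a minimal illustration, not the project's code: the function name `build_pyramid`, the nested-list representation and the restriction to a square buffer with a power-of-two side length are assumptions made to keep the example short.

```python
def build_pyramid(ids):
    """Build an occupancy pyramid from a square, power-of-two id-buffer.

    levels[0] is the id-buffer itself. Each higher level has half the side
    length and stores 1 where any of the four cells beneath is non-zero.
    (Hypothetical helper; square power-of-two input is an assumption.)
    """
    size = len(ids)
    assert size > 0 and size & (size - 1) == 0, "sketch assumes power-of-two size"
    assert all(len(row) == size for row in ids), "sketch assumes a square buffer"

    levels = [ids]
    while size > 1:
        below = levels[-1]
        size //= 2
        level = [
            [
                1 if (below[2 * y][2 * x] or below[2 * y][2 * x + 1]
                      or below[2 * y + 1][2 * x] or below[2 * y + 1][2 * x + 1])
                else 0
                for x in range(size)
            ]
            for y in range(size)
        ]
        levels.append(level)
    return levels


# A 4x4 id-buffer with a single pickable object (id 7).
ids = [
    [0, 0, 0, 0],
    [0, 0, 0, 7],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
pyramid = build_pyramid(ids)
```

Here `pyramid[-1]` is the single top-level pixel; a value of 0 there means the search can stop immediately because nothing pickable was read back.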

When the quadtree is used for the nearest-neighbour search, it is given a query position (usually the cursor position). The search then traverses the quadtree from top to bottom, eliminating all areas that are empty or too far away. The distance between a pixel and the query position is computed as the Euclidean distance.
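One way such a top-down search could look is sketched below. It is an assumption-laden illustration rather than the project's implementation: `build_pyramid`, `nearest_id` and `max_dist` are hypothetical names, the buffer is assumed square with a power-of-two side length, and children are visited nearest-first so that distant areas are pruned early.

```python
import math


def build_pyramid(ids):
    # Occupancy pyramid: level 0 is the id-buffer, each level above halves the size.
    levels = [ids]
    while len(levels[-1]) > 1:
        b = levels[-1]
        n = len(b) // 2
        levels.append([[1 if any(b[2 * y + j][2 * x + i]
                                 for j in (0, 1) for i in (0, 1)) else 0
                        for x in range(n)] for y in range(n)])
    return levels


def nearest_id(levels, px, py, max_dist=math.inf):
    """Return (id, distance) of the nearest non-zero pixel, or (0, inf) if none."""
    best = [0, max_dist]  # best id and best distance found so far

    def rect_distance(level, x, y):
        # Euclidean distance from (px, py) to the pixel square covered by a cell.
        cell = 1 << level
        x0, y0 = x * cell, y * cell
        dx = max(x0 - px, 0, px - (x0 + cell - 1))
        dy = max(y0 - py, 0, py - (y0 + cell - 1))
        return math.hypot(dx, dy)

    def visit(level, x, y):
        if not levels[level][y][x]:
            return  # area contains no pickable pixel
        d = rect_distance(level, x, y)
        if d >= best[1]:
            return  # area cannot contain anything closer than the current best
        if level == 0:
            best[0], best[1] = levels[0][y][x], d
            return
        children = [(2 * x + i, 2 * y + j) for j in (0, 1) for i in (0, 1)]
        children.sort(key=lambda c: rect_distance(level - 1, c[0], c[1]))
        for cx, cy in children:
            visit(level - 1, cx, cy)

    visit(len(levels) - 1, 0, 0)
    return (best[0], best[1]) if best[0] else (0, math.inf)


ids = [
    [0, 0, 0, 0],
    [0, 0, 0, 7],
    [0, 0, 0, 0],
    [9, 0, 0, 0],
]
levels = build_pyramid(ids)
found_id, found_dist = nearest_id(levels, 0, 0)
```

Passing a finite `max_dist` restricts the search to the influence zone, so pixels at or beyond that distance are never reported.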

We implemented two types of what we call update-modes:

Static-update reads back the whole id-buffer every time the scene changes (that is, whenever the projection-matrix, the view-matrix or any object in the scene-graph changes). This update-mode is intended to be fast for static scenes, since the id-buffer only has to be read once.

Dynamic-update reads back only the area around the cursor, but does so every frame. The size of the area is defined by the maximum-pickable-distance. This update-mode is intended to be fast for dynamic scenes, where the acceleration structure would need to be updated every frame.
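To illustrate how small the per-frame read-back can be when only the area around the cursor is read, the following sketch computes a read-back rectangle clamped to the screen. The function name `readback_rect`, the square shape of the region and the example value of 64 pixels for the maximum-pickable-distance are assumptions for illustration only.

```python
def readback_rect(cursor_x, cursor_y, max_pick_dist, width, height):
    """Return (x, y, w, h) of the id-buffer region around the cursor.

    The region is a square of side 2 * max_pick_dist + 1 centred on the
    cursor, clipped to the screen. Assumes the cursor is inside the screen.
    """
    x0 = max(cursor_x - max_pick_dist, 0)
    y0 = max(cursor_y - max_pick_dist, 0)
    x1 = min(cursor_x + max_pick_dist + 1, width)   # exclusive right edge
    y1 = min(cursor_y + max_pick_dist + 1, height)  # exclusive bottom edge
    return x0, y0, x1 - x0, y1 - y0


# Hypothetical maximum-pickable-distance of 64 pixels on a 1920x1080 screen.
x, y, w, h = readback_rect(960, 540, 64, 1920, 1080)
pixels_partial = w * h
pixels_full = 1920 * 1080
```

With these assumed numbers the partial read covers 129 x 129 pixels, under one percent of the full buffer, which is why reading it every frame can still be cheap.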

As suggested by Elmqvist and Fekete, two different approaches to manipulating the cursor movement have been implemented. The first scale function uses a linear approach, while the second is based on an inverse power function.

The linear scale function interpolates between a given lower- and upper-bound, based on the distance. The cursor movement is slowed down to the lower-bound value while hovering over a clickable object. As the cursor moves away from a pickable object, the scale is interpolated until it reaches the upper-bound at the edge of the influence zone. This scale function slows the cursor down quite quickly when it crosses an area containing pickable objects.

The inverse scale function is based on an inverse power function. This results in quite aggressive behaviour when the cursor is very close to a clickable object. In addition, the inverse scale function does not accelerate the cursor movement as much as the linear scale function.
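The two scale functions might be sketched as below. The linear variant follows the description directly. The text does not give the exact inverse power formula, so the form used in `inverse_scale` is an assumption chosen only to reproduce the described behaviour (lower-bound on the object, never quite reaching the upper-bound); all function names, the `radius` and `power` parameters and the example bounds are hypothetical.

```python
def clamp01(t):
    """Clamp t to the range [0, 1]."""
    return max(0.0, min(1.0, t))


def linear_scale(distance, lower, upper, radius):
    """Interpolate linearly from lower (on the object) to upper (edge of zone)."""
    t = clamp01(distance / radius)
    return lower + (upper - lower) * t


def inverse_scale(distance, lower, upper, radius, power=2.0):
    """ASSUMED inverse power form, not taken from the paper or the project.

    Starts at lower on the object and approaches, but does not reach,
    upper at the edge of the influence zone.
    """
    t = clamp01(distance / radius)
    return lower + (upper - lower) * (1.0 - 1.0 / (1.0 + t) ** power)


def scale_motion(dx, dy, scale):
    """Apply the scale factor to a motor-space delta to get the visual-space delta."""
    return dx * scale, dy * scale


# Hypothetical bounds: 0.2x on an object, 2.0x far away, 100 px influence zone.
on_object = linear_scale(0, 0.2, 2.0, 100)
halfway = linear_scale(50, 0.2, 2.0, 100)
inv_edge = inverse_scale(100, 0.2, 2.0, 100)
moved = scale_motion(10, -4, 0.5)
```

With these assumed parameters the inverse variant tops out below the upper-bound at the edge of the zone, which matches the observation that it accelerates the cursor less than the linear one.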

To give a better understanding of how the scale function affects the cursor movement, and a more intuitive feeling for which object is currently in focus, we implemented two types of visual feedback.

A simple approach is to modify the cursor-icon itself. The color changes from the standard white-filled icon to a red one, which makes the user aware that the cursor is affected by the scale function. To enhance the feeling of high precision, the cursor-icon shrinks as it approaches a clickable object. Especially when hovering over many small clickable objects within a small area, a smaller cursor-icon occludes less than a normally scaled one. As additional feedback, the cursor starts blinking when it is directly above a pickable object. The user can also choose between two common cursor-icons (arrow or hand).
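A possible mapping from distance to cursor appearance is sketched below. The linear blend from white to red, the minimum icon scale of 0.5 and the name `cursor_feedback` are assumptions; the text only states that the color changes, the icon shrinks near objects, and the cursor blinks when directly above one.

```python
def cursor_feedback(distance, radius, min_scale=0.5):
    """Return (rgb_color, icon_scale, blinking) for a given distance.

    distance: screen-space distance to the nearest pickable object.
    radius:   size of the influence zone (hypothetical parameter).
    """
    t = max(0.0, min(1.0, distance / radius))
    color = (1.0, t, t)                               # red at t=0, white at t=1
    icon_scale = min_scale + (1.0 - min_scale) * t    # smaller when closer
    blinking = distance == 0                          # directly above an object
    return color, icon_scale, blinking


far = cursor_feedback(100, 100)
on_top = cursor_feedback(0, 100)
```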

The currently focused object (the object nearest to the cursor) is highlighted in the scene using edge-glow. This gives the user a better idea of which object will be picked.

Our implementation provides two different scenes to show the importance of using an appropriate data structure to access the object closest to the cursor. The first scene is static (no movement in the scene), while the second scene is dynamic (animated objects in the scene). For testing we used the standard parameters of our program.

We tested our code on a Microsoft Windows 7 Professional operating system.

Our test machine had the following specifications: Intel Core i5 at 3.4 GHz, 8 GB RAM, GeForce GTX 760. We tested in fullscreen-mode at a resolution of 1920x1080.

| | Static-update | Dynamic-update |
|---|---|---|
| Static-scene | 1.5 MSpF | 3.5 MSpF |
| Dynamic-scene | 10 MSpF | 3.5 MSpF |
