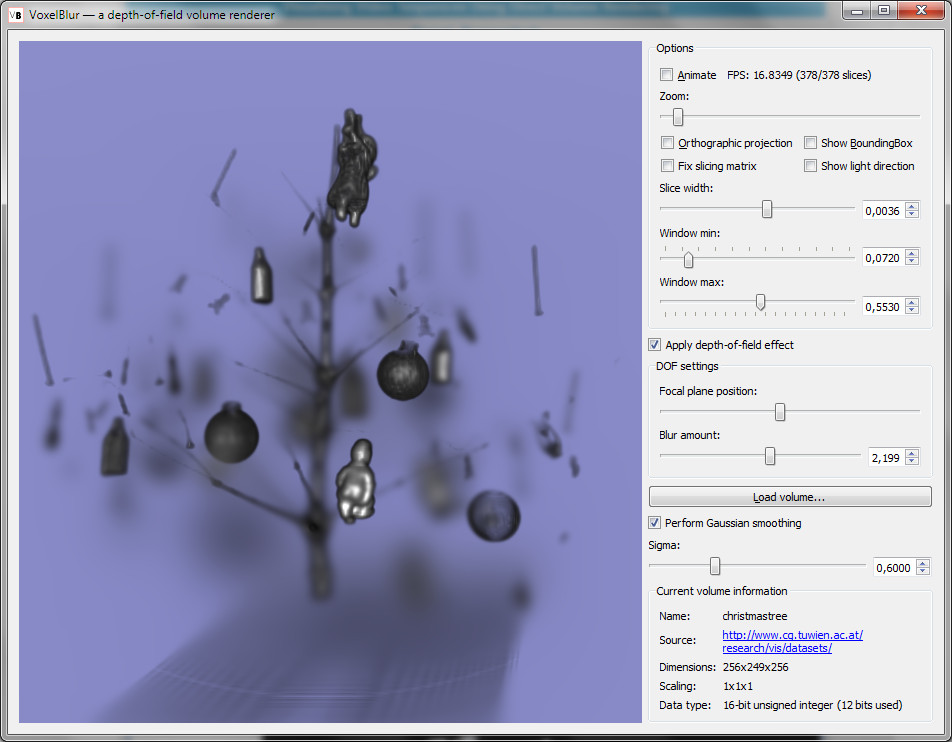

Screenshot of the application

Introduction

The VoxelBlur application was created by me, Florian Schaukowitsch, as a lab exercise during the course Visualisierung 2 (Visualization 2) at the Vienna University of Technology.

The application is an implementation of the ideas presented in the paper "Depth of Field Effects for Interactive Direct Volume Rendering" by Schott et al.

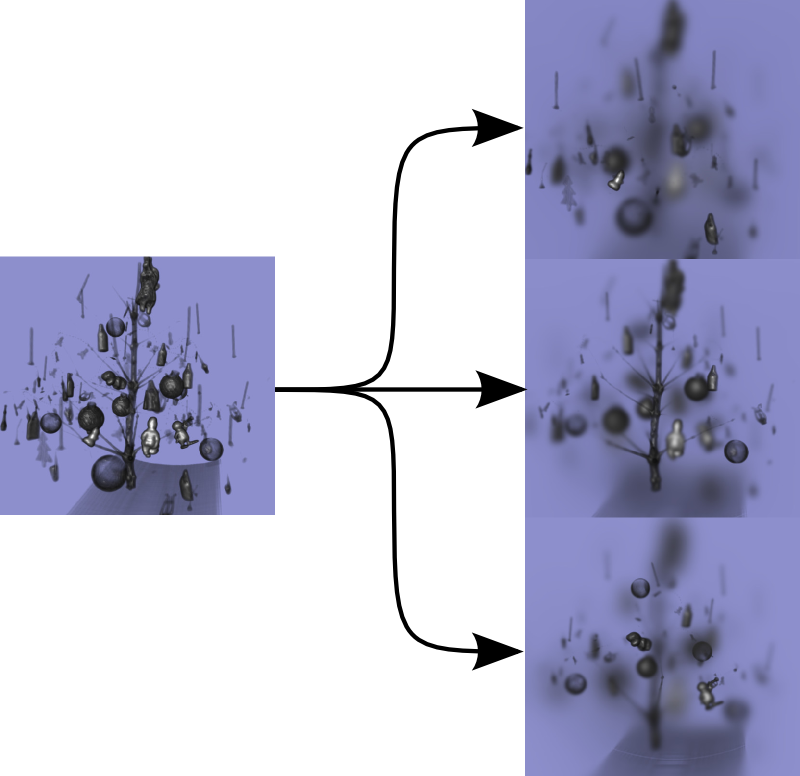

VoxelBlur is an interactive direct volume rendering program which supports loading 8/16-bit raw volumes using a simple metadata format (a selection of example volumes is included in the downloads). The main feature of the volume renderer is a depth-of-field effect, which selectively "blurs the voxels" depending on their distance to a predefined focal plane, simulating the effects of a real-world camera lens system. In the user interface, the focal plane can be moved and the strength of the effect changed. Try loading an example volume and bringing different parts of the volume into focus, blurring out the rest of the volume. Use the left mouse button to rotate the volume, and the right mouse button to rotate the light. Zoom with the mouse wheel. The effect of changing the focal plane can also be seen below: one can focus on items in front of, behind or on the trunk of the Christmas tree.

Results of different focal plane settings

The advantage of the depth-of-field effect is that it enhances depth perception for us human viewers, and it also provides a way to better separate the focus from the context.

Background

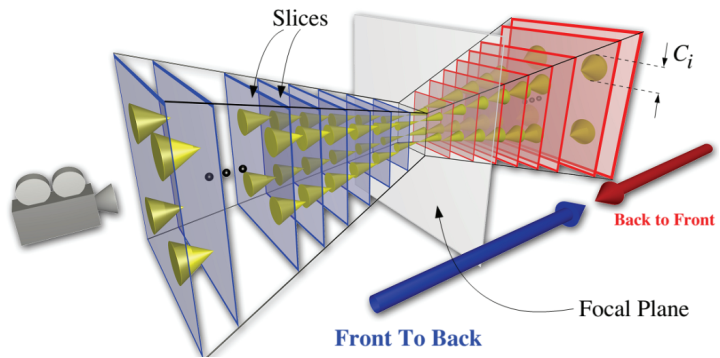

The renderer is based on the slicing volume rendering method: several view-aligned planes, at varying distances from the camera, are intersected with the volume's bounding box, each intersection yielding a polygon. This polygon is tessellated into triangles and drawn with standard rendering methods. The slice shader can evaluate the volume and apply shading, transfer functions etc. The results of all slices have to be blended together, which usually happens in back-to-front order. An overview of this type of volume rendering can also be found here, courtesy of Nvidia.
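To make the slicing step more concrete, here is a minimal CPU sketch of intersecting one plane with the bounding box. It is an illustration and not the code used in VoxelBlur: the names `Vec3`, `slicePolygon` and `fanIndices` are hypothetical, the bounding box is assumed to be the unit cube, and the plane is given as dot(n, p) = d.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct Vec3 { float x, y, z; };

static Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
static Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
static Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Intersects the plane dot(n, p) = d with the unit cube [0,1]^3 (assumed box).
// Returns the polygon corners sorted by angle around the plane normal,
// or an empty vector if the plane misses the cube.
// Note: a plane passing exactly through a cube corner can produce duplicate
// points; a production version would merge them.
std::vector<Vec3> slicePolygon(Vec3 n, float d) {
    assert(dot(n, n) > 0.0f);
    // Corner i has x = bit 0, y = bit 1, z = bit 2 of i.
    auto corner = [](int i) {
        return Vec3{float(i & 1), float((i >> 1) & 1), float((i >> 2) & 1)};
    };
    static const int edges[12][2] = {
        {0, 1}, {2, 3}, {4, 5}, {6, 7},   // edges along x
        {0, 2}, {1, 3}, {4, 6}, {5, 7},   // edges along y
        {0, 4}, {1, 5}, {2, 6}, {3, 7}};  // edges along z
    std::vector<Vec3> pts;
    for (const auto& e : edges) {
        Vec3 a = corner(e[0]), b = corner(e[1]);
        float da = dot(n, a) - d, db = dot(n, b) - d;
        if ((da < 0.0f) != (db < 0.0f))  // edge endpoints lie on opposite sides
            pts.push_back(a + (b - a) * (da / (da - db)));
    }
    if (pts.size() < 3) return {};

    // Sort the points by angle around their centroid, measured in the plane.
    Vec3 c{0, 0, 0};
    for (Vec3 p : pts) c = c + p * (1.0f / pts.size());
    Vec3 u = pts[0] - c;
    Vec3 v = cross(n, u);
    auto angle = [&](Vec3 p) { return std::atan2(dot(p - c, v), dot(p - c, u)); };
    std::sort(pts.begin(), pts.end(),
              [&](Vec3 a, Vec3 b) { return angle(a) < angle(b); });
    return pts;
}

// Triangle-fan indices (0, i, i+1) for a convex polygon with n corners.
std::vector<unsigned> fanIndices(std::size_t n) {
    std::vector<unsigned> idx;
    for (std::size_t i = 1; i + 1 < n; ++i) {
        idx.push_back(0);
        idx.push_back(unsigned(i));
        idx.push_back(unsigned(i + 1));
    }
    return idx;
}
```

Because the intersection of a plane with a box is always convex, a simple triangle fan is enough for the tessellation; a slice has between three and six corners.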

Schott et al. extend this model to allow for the depth-of-field effect. The image below this paragraph explains the basic process. The slices are divided into two sets: the ones in front of the focal plane, and the ones behind it. The slices in front are processed in front-to-back order, the ones behind in back-to-front order, so both sides work their way towards the focal plane. Each side has an intermediate buffer, which contains the merged image for its side. In each step, the image obtained for the current slice is combined with what is already present in the intermediate buffer. Sampling from the intermediate buffer is blurred during this merging: the circle of confusion obtained from the camera parameters is transformed into clip space and then used to sample the previous buffer at multiple points around the original point, resulting in a blur effect. The yellow cones in the image below represent this area sampling. Afterwards, the two intermediate buffers, one per side, are combined to produce the final output image.
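The split into two slice sets and their processing order can be sketched as follows. This is only an illustration under assumptions: `SliceOrder` and `orderSlices` are hypothetical names, slice depths are taken as distances from the camera sorted in ascending order, and a slice lying exactly on the focal plane is assigned to the back set.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct SliceOrder {
    std::vector<std::size_t> front;  // slice indices, processed front to back
    std::vector<std::size_t> back;   // slice indices, processed back to front
};

// depths: distance of each slice from the camera, sorted ascending.
// focusDepth: distance of the focal plane from the camera.
SliceOrder orderSlices(const std::vector<float>& depths, float focusDepth) {
    SliceOrder order;
    // Front set: start at the slice nearest to the camera and walk
    // towards the focal plane.
    for (std::size_t i = 0; i < depths.size(); ++i) {
        assert(i == 0 || depths[i - 1] <= depths[i]);
        if (depths[i] < focusDepth) order.front.push_back(i);
    }
    // Back set: start at the farthest slice and walk towards the focal plane.
    for (std::size_t i = depths.size(); i-- > 0;)
        if (depths[i] >= focusDepth) order.back.push_back(i);
    return order;
}
```

Both lists end at the focal plane. Since the intermediate buffer is blurred again in every step, the slices processed first, which are the ones farthest from the focal plane, end up blurred the most.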

Applying the blur effect by separating back and front slices

Implementation

The application is written in C++, using the cross-platform Qt framework in version 5.2. Rendering is implemented using modern OpenGL (mostly 3.3 core profile + compute shaders for preprocessing). A simple, custom metadata format is used to describe the raw volume data: it supports arbitrary sizing in each dimension, 8- or 16-bit integers, byte skipping and gzip-compressed volumes. After loading the volume into a 3D texture, it is preprocessed using OpenGL compute shaders. 3D Gaussian convolution (strength adjustable in the interface) is used to obtain a volume with reduced noise and smoother surfaces. Another compute shader calculates the gradients and optionally rescales the data to fit into the [0,1] range (handy for the common 12-bit volumes, where each voxel is stored in 2 bytes, but only uses the value range of 12 bits).
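As a rough CPU illustration of the second preprocessing pass, the sketch below computes a gradient and rescales 16-bit data. It makes several assumptions that the text above does not confirm: gradients are taken as central differences with clamped borders, and the rescale maps the largest stored value to 1.0. `Volume`, `Gradient`, `centralDifference` and `rescaleToUnitRange` are hypothetical names; the actual application does this work in compute shaders.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Volume {
    int nx, ny, nz;
    std::vector<float> data;  // nx * ny * nz values, x varies fastest

    float at(int x, int y, int z) const {
        // Clamp coordinates so border voxels reuse their nearest neighbour.
        x = std::min(std::max(x, 0), nx - 1);
        y = std::min(std::max(y, 0), ny - 1);
        z = std::min(std::max(z, 0), nz - 1);
        return data[(std::size_t(z) * ny + y) * nx + x];
    }
};

struct Gradient { float gx, gy, gz; };

// Central differences (an assumed scheme), in units of value per voxel.
Gradient centralDifference(const Volume& v, int x, int y, int z) {
    return {0.5f * (v.at(x + 1, y, z) - v.at(x - 1, y, z)),
            0.5f * (v.at(x, y + 1, z) - v.at(x, y - 1, z)),
            0.5f * (v.at(x, y, z + 1) - v.at(x, y, z - 1))};
}

// Rescales raw integers so that the largest stored value maps to 1.0.
// For a 12-bit volume stored in 16 bits this stretches 0..4095 onto 0..1.
std::vector<float> rescaleToUnitRange(const std::vector<std::uint16_t>& raw) {
    assert(!raw.empty());
    std::uint16_t maxValue = *std::max_element(raw.begin(), raw.end());
    float scale = maxValue > 0 ? 1.0f / maxValue : 0.0f;
    std::vector<float> out(raw.size());
    for (std::size_t i = 0; i < raw.size(); ++i) out[i] = raw[i] * scale;
    return out;
}
```

Without the rescale, a 12-bit volume read through a normalized 16-bit texture would only occupy roughly the lowest sixteenth of the [0,1] range (4095 / 65535).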

The volume slicing process happens in application code on the CPU. Care was taken to make the GPU work more asynchronously by performing manual synchronization for the data upload of each slice, which improves overall performance somewhat compared to letting the driver take care of synchronization. A slice shader evaluates the volume, then performs data windowing and shading with a directional light, using the Blinn-Phong lighting model with the data gradients as normals. It was planned to include user-definable transfer functions, but time constraints unfortunately forced me to leave that out.
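The per-sample work of the slice shader can be sketched on the CPU as below. This is a hedged illustration, not the shader itself: `applyWindow` and `blinnPhong` are hypothetical names, the window is assumed to be given as center and width, the lighting coefficients are free parameters, and the normalized gradient is used directly as the normal (depending on the data convention it may need to be negated).

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Returns the unit vector, or the zero vector if v has no length.
static Vec3 normalize(Vec3 v) {
    float len = std::sqrt(dot(v, v));
    if (len == 0.0f) return {0.0f, 0.0f, 0.0f};
    return {v.x / len, v.y / len, v.z / len};
}

// Data windowing: maps [center - width/2, center + width/2] linearly
// onto [0, 1] and clamps everything outside.
float applyWindow(float value, float center, float width) {
    assert(width > 0.0f);
    float t = (value - (center - 0.5f * width)) / width;
    return std::min(1.0f, std::max(0.0f, t));
}

// Blinn-Phong intensity for one sample. toLight and toViewer point away
// from the sample; the gradient serves as the surface normal.
float blinnPhong(Vec3 gradient, Vec3 toLight, Vec3 toViewer,
                 float ambient, float diffuse, float specular, float shininess) {
    Vec3 n = normalize(gradient);
    Vec3 l = normalize(toLight);
    Vec3 v = normalize(toViewer);
    Vec3 h = normalize({l.x + v.x, l.y + v.y, l.z + v.z});  // half vector
    float nDotL = std::max(0.0f, dot(n, l));
    float nDotH = std::max(0.0f, dot(n, h));
    // No highlight on surfaces facing away from the light.
    float spec = nDotL > 0.0f ? specular * std::pow(nDotH, shininess) : 0.0f;
    return ambient + diffuse * nDotL + spec;
}
```

In homogeneous regions the gradient vanishes; here the normal then becomes the zero vector, so only the ambient term remains.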

The depth-of-field effect is implemented with the help of OpenGL framebuffer objects. Back-to-front compositing benefits from native GPU blending, but the front-to-back (FTB) compositing requires a custom blending method and therefore support from a shader. For blurring, four samples are taken at the radius of the circle of confusion and averaged. This provides a reasonable result with relatively good performance. The increase of the blurring effect with increasing distance is governed by a user-defined "strength" parameter, as explained in the paper.
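A small CPU sketch of the two blend operators and the four-sample blur may help to see how one compositing step fits together. It rests on assumptions: colors are premultiplied by alpha, the four samples are placed axis-aligned at the given pixel radius, and lookups outside the image are clamped to the border. `Rgba`, `Image`, `blendOver`, `blendUnder`, `blurredFetch` and `frontStep` are hypothetical names, not taken from the VoxelBlur source.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Rgba { float r, g, b, a; };  // premultiplied alpha (assumed)

// Back-to-front "over": the new slice lies in front of what is in dst.
Rgba blendOver(Rgba slice, Rgba dst) {
    float k = 1.0f - slice.a;
    return {slice.r + k * dst.r, slice.g + k * dst.g,
            slice.b + k * dst.b, slice.a + k * dst.a};
}

// Front-to-back "under": the new slice lies behind what is in acc.
Rgba blendUnder(Rgba acc, Rgba slice) {
    float k = 1.0f - acc.a;
    return {acc.r + k * slice.r, acc.g + k * slice.g,
            acc.b + k * slice.b, acc.a + k * slice.a};
}

struct Image {
    int width, height;
    std::vector<Rgba> pixels;  // row-major, width * height entries

    Rgba fetch(int x, int y) const {
        // Clamp to the border instead of reading outside the image.
        x = std::min(std::max(x, 0), width - 1);
        y = std::min(std::max(y, 0), height - 1);
        return pixels[std::size_t(y) * width + x];
    }
};

// Averages four samples placed at the given radius (in pixels) around (x, y).
Rgba blurredFetch(const Image& img, int x, int y, float radius) {
    assert(radius >= 0.0f);
    int r = int(std::lround(radius));
    const int offsets[4][2] = {{r, 0}, {-r, 0}, {0, r}, {0, -r}};
    Rgba sum{0.0f, 0.0f, 0.0f, 0.0f};
    for (const auto& o : offsets) {
        Rgba s = img.fetch(x + o[0], y + o[1]);
        sum.r += s.r; sum.g += s.g; sum.b += s.b; sum.a += s.a;
    }
    return {0.25f * sum.r, 0.25f * sum.g, 0.25f * sum.b, 0.25f * sum.a};
}

// One step on the front side: blur the accumulated buffer, then place the
// new slice behind it. The back side would use blendOver instead.
Image frontStep(const Image& acc, const Image& slice, float radius) {
    assert(acc.width == slice.width && acc.height == slice.height);
    Image out{acc.width, acc.height, std::vector<Rgba>(acc.pixels.size())};
    for (int y = 0; y < acc.height; ++y)
        for (int x = 0; x < acc.width; ++x)
            out.pixels[std::size_t(y) * acc.width + x] =
                blendUnder(blurredFetch(acc, x, y, radius), slice.fetch(x, y));
    return out;
}
```

For premultiplied colors, the "over" operator corresponds to the fixed-function setup `glBlendFunc(GL_ONE, GL_ONE_MINUS_SRC_ALPHA)`. In both directions the blurred read comes from the buffer written in the previous step, which is why each step renders into a separate framebuffer object.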

Downloads & Source

Note that the application was only tested on Windows 7 64-bit, with an AMD HD 5850 graphics card. It should run on any graphics card supporting compute shaders. This includes the "DirectX 11" cards, with a reasonably current driver (at least mid-2013 for AMD). The source code is provided with a Visual Studio 2012 project. It has no other library dependencies apart from Qt 5.2. Theoretically, it should compile on any Qt-supported platform, but unfortunately I could not test that.

The downloads require the free 7-zip archiver to decompress.