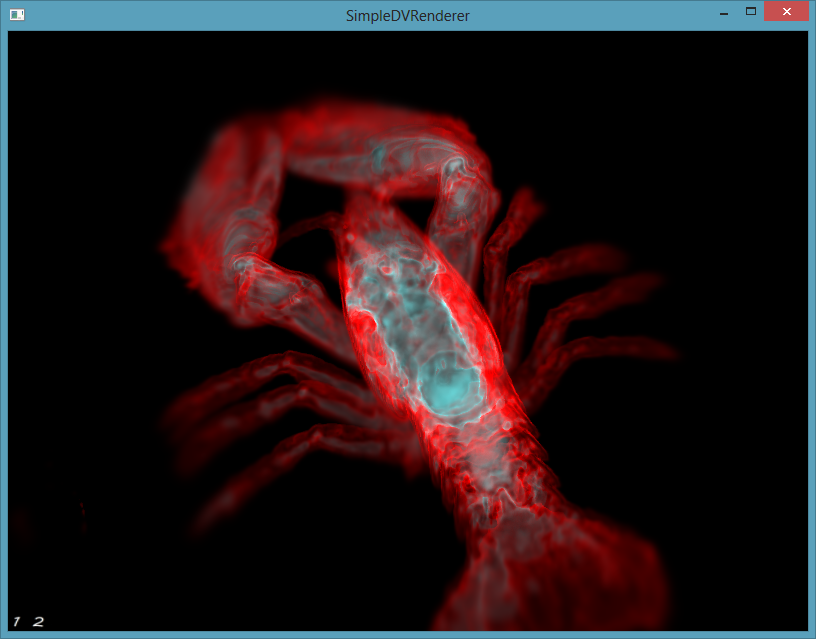

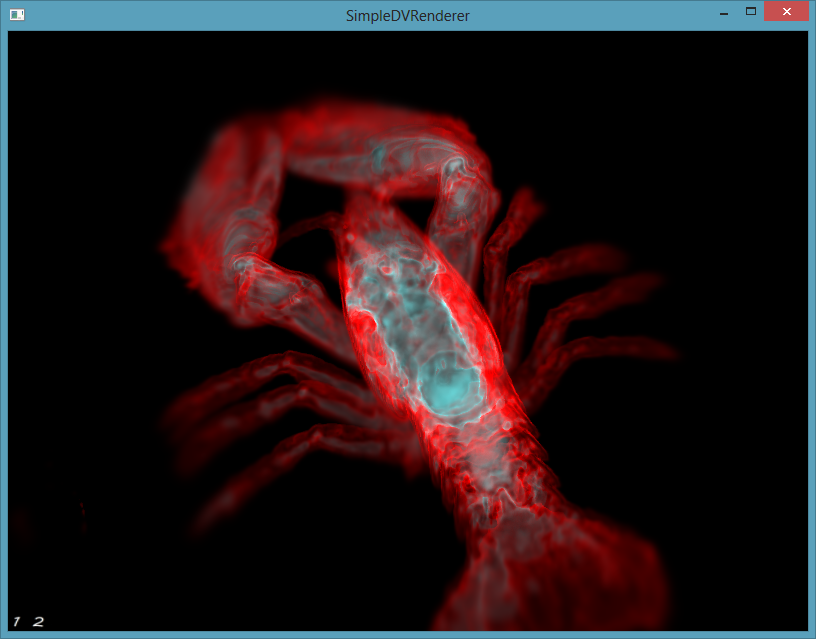

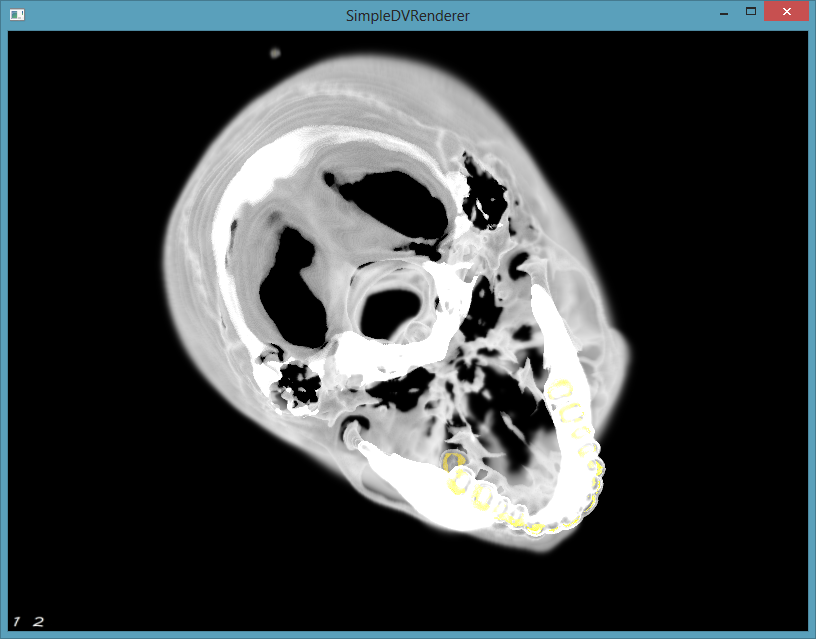

- now with Depth of Field -

This program was developed by Markus Toepfer for the course Visualization II in the summer term of 2013. The goal was to implement a depth-of-field effect in a slice-based volume renderer, following the paper “Depth of Field Effects for Interactive Volume Rendering” by Schott et al.

What is Depth of Field, and how is it achieved?

Depth of field (DoF) originates in photography: when a lens is used to capture an image, only a certain part of the scene is “in focus” (sharp in the image), while parts that are “out of focus” are blurred. The farther an area lies from the focal plane, the more blurred it gets. An out-of-focus point is imaged as a “circle of confusion”, which describes the shape and size of the blur. It can be calculated with a geometric model from the aperture and focal length of the lens and the distance to the object. The calculation can be done either in view space (the world) or in image space (on the camera sensor). The magnification of the projected image is determined by the distance of the image plane, the focal length of the lens and the focus distance.
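The geometric model can be sketched with the standard thin-lens formula for the circle of confusion. This is a minimal illustration, not code from the project; the function name `circleOfConfusion` and its parameter names are hypothetical, and the thin-lens model itself is an assumption about which geometric model is meant.

```cpp
#include <cassert>
#include <cmath>

// Thin-lens circle of confusion diameter on the image plane (illustrative).
// All distances use the same unit and are measured from the lens.
//   apertureDiameter : diameter of the lens opening
//   focalLength      : focal length f of the lens
//   focusDistance    : distance to the plane that is imaged sharply (> f)
//   objectDistance   : distance to the point being imaged (> 0)
double circleOfConfusion(double apertureDiameter, double focalLength,
                         double focusDistance, double objectDistance)
{
    assert(focusDistance > focalLength && objectDistance > 0.0);
    // Magnification of the plane in focus.
    const double magnification = focalLength / (focusDistance - focalLength);
    // The blur grows with the relative distance from the focal plane.
    return apertureDiameter * magnification
         * std::fabs(objectDistance - focusDistance) / objectDistance;
}
```

A point exactly at the focus distance yields a diameter of zero, and the diameter grows as the point moves away from the focal plane.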

The paper also suggests removing the option to change the lens parameters (aperture, focal length and focal distance) directly for the sake of usability, since the circle of confusion is proportional to the distance between the object and the focal plane. Instead, the focus should be a user-specified point in model space. When the camera position or orientation changes, the focal distance changes with it. The aperture is set by a function of the focal distance and a scalar that states how quickly the circle of confusion grows with the distance to the focal plane. With this simplification, the same region stays in focus all the time (as long as the user does not deliberately change the area in focus).
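The simplified user model could look roughly like the following sketch. The names `focalDistance`, `simplifiedCoc` and `blurScale` are hypothetical, and the sketch assumes a unit-length view direction and a blur that grows linearly with the distance to the focal plane.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;

double dot(const Vec3& a, const Vec3& b)
{
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Distance from the camera to the focal plane, measured along the view
// direction. viewDir is assumed to be normalized. Recomputing this whenever
// the camera moves keeps the user-chosen focus point sharp.
double focalDistance(const Vec3& cameraPos, const Vec3& viewDir,
                     const Vec3& focusPoint)
{
    const Vec3 toFocus = {focusPoint[0] - cameraPos[0],
                          focusPoint[1] - cameraPos[1],
                          focusPoint[2] - cameraPos[2]};
    return dot(toFocus, viewDir);
}

// Linear blur model: blurScale is the single user-facing scalar that controls
// how fast the circle of confusion grows away from the focal plane.
double simplifiedCoc(double sliceDepth, double focalDist, double blurScale)
{
    return blurScale * std::fabs(sliceDepth - focalDist);
}
```

With this setup the user only picks a focus point and one scalar; the focal distance follows the camera automatically.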

Integration into a slice-based renderer

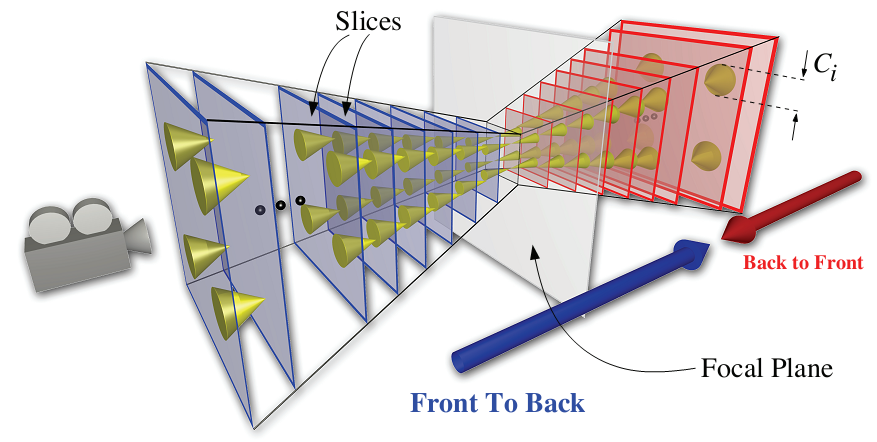

From the 3D scalar field, a proxy geometry of slices is created by intersecting planes with the bounding box of the volume. A fragment program computes the color and opacity of each slice.

These slices are then partitioned into slices in front of the focal plane and slices behind it. Slices in front are rendered into two intermediate buffers and traversed front to back, slice by slice, with the DoF blur approximated at each step until the focal plane is reached. This is done by successively swapping the buffers. Slices behind the focal plane are traversed back to front in the eye buffer, and in the last step the result is blended with the last intermediate buffer of the slices in front of the focal plane.
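The partitioning and traversal order described above can be sketched on the CPU as follows. This is only an illustration of the ordering and the buffer swap; `SliceOrder`, `partitionSlices` and `PingPong` are hypothetical names, slices are represented by their depth alone, and the choice to treat a slice exactly on the focal plane as "behind" is an assumption.

```cpp
#include <algorithm>
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

struct SliceOrder {
    std::vector<double> front; // in front of the focal plane, nearest first
    std::vector<double> back;  // at or behind the focal plane, farthest first
};

// Split slice depths at the focal plane and sort each half so that both
// traversals end at the focal plane.
SliceOrder partitionSlices(const std::vector<double>& depths, double focalDist)
{
    SliceOrder order;
    for (double d : depths) {
        (d < focalDist ? order.front : order.back).push_back(d);
    }
    std::sort(order.front.begin(), order.front.end());
    std::sort(order.back.begin(), order.back.end(), std::greater<double>());
    return order;
}

// Indices of the two intermediate buffers; they swap roles after each slice.
struct PingPong {
    int target = 0; // buffer the current slice is rendered into
    int source = 1; // buffer sampled as a texture for blurring
    void swap() { std::swap(target, source); }
};
```

In a real renderer each entry would be a slice polygon and each buffer index would refer to a framebuffer object with a color texture attached.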

The DoF approximation is done with incremental filtering between the buffers (slices).

The blurring is achieved by averaging samples from the previous intermediate/eye buffer in a neighbourhood around the current fragment. In their implementation, the authors used a perspective projection matrix to compute the image-space circle of confusion for a given slice, while another matrix maps clip space to texture coordinate space. This was later simplified to a second model that depends only on a value scaling the blur linearly with the distance.

In the fragment shader, the sample offsets around the current fragment position within the circle of confusion are calculated as a regular grid of user-specified resolution, which allows a reasonable compromise between performance and image quality. In my implementation I used a 5x5 grid, while the authors used a 2x2 grid. Samples are taken from the previous intermediate buffer and averaged. The slice is rendered into one buffer and blended with the other, which is used as the texture source for blurring. Then the buffers are swapped: the buffer previously used as the texture source is cleared and becomes the new render target.
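The grid sampling can be illustrated with a small CPU sketch. The actual work happens in a fragment shader; this version uses a single-channel image, integer pixel offsets and clamped borders, all of which are simplifying assumptions, and the names `Buffer` and `blurredSample` are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Single-channel stand-in for the previous intermediate buffer.
struct Buffer {
    int width;
    int height;
    std::vector<float> pixels; // row-major

    // Clamp-to-edge lookup, similar to a clamped texture fetch.
    float at(int x, int y) const
    {
        x = std::max(0, std::min(x, width - 1));
        y = std::max(0, std::min(y, height - 1));
        return pixels[static_cast<std::size_t>(y) * width + x];
    }
};

// Average gridRes x gridRes samples on a regular grid that spans the circle
// of confusion diameter (in pixels) around the fragment at (x, y).
float blurredSample(const Buffer& previous, int x, int y,
                    float cocDiameter, int gridRes)
{
    assert(gridRes >= 1);
    float sum = 0.0f;
    for (int j = 0; j < gridRes; ++j) {
        for (int i = 0; i < gridRes; ++i) {
            // Normalized offsets in [-0.5, 0.5]; a 1x1 grid samples the centre.
            const float u = gridRes > 1 ? float(i) / float(gridRes - 1) - 0.5f : 0.0f;
            const float v = gridRes > 1 ? float(j) / float(gridRes - 1) - 0.5f : 0.0f;
            const int sx = x + static_cast<int>(std::lround(u * cocDiameter));
            const int sy = y + static_cast<int>(std::lround(v * cocDiameter));
            sum += previous.at(sx, sy);
        }
    }
    return sum / float(gridRes * gridRes);
}
```

A larger `gridRes` gives a smoother blur at the cost of more texture fetches per fragment, which is the trade-off between the 5x5 and 2x2 grids mentioned above.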

To handle the slice borders correctly, the slices need to be scaled by the circle of confusion diameter, which is done in the vertex shader.

After that, the buffers are swapped and the next slice is processed.

(Image taken from the paper)

Finally, the intermediate buffer is blended on top of the eye buffer using the over operator to achieve the final framebuffer image.
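For reference, the over operator for a single pixel can be written as below. The sketch assumes premultiplied-alpha colors, which is an assumption about the buffer contents rather than something stated here; `Rgba` and `over` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

// Color with premultiplied alpha (r, g, b already multiplied by a).
struct Rgba {
    float r, g, b, a;
};

// Porter-Duff "over": the front color covers the back color in proportion
// to the front's opacity.
Rgba over(const Rgba& front, const Rgba& back)
{
    const float t = 1.0f - front.a; // how much of the back remains visible
    return {front.r + t * back.r,
            front.g + t * back.g,
            front.b + t * back.b,
            front.a + t * back.a};
}
```

Here `front` would correspond to the intermediate buffer (slices in front of the focal plane) and `back` to the eye buffer.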

Implementation Notes:

The implementation was done in C++ with OpenGL 3, using Qt5 for window handling and the OpenGL context.

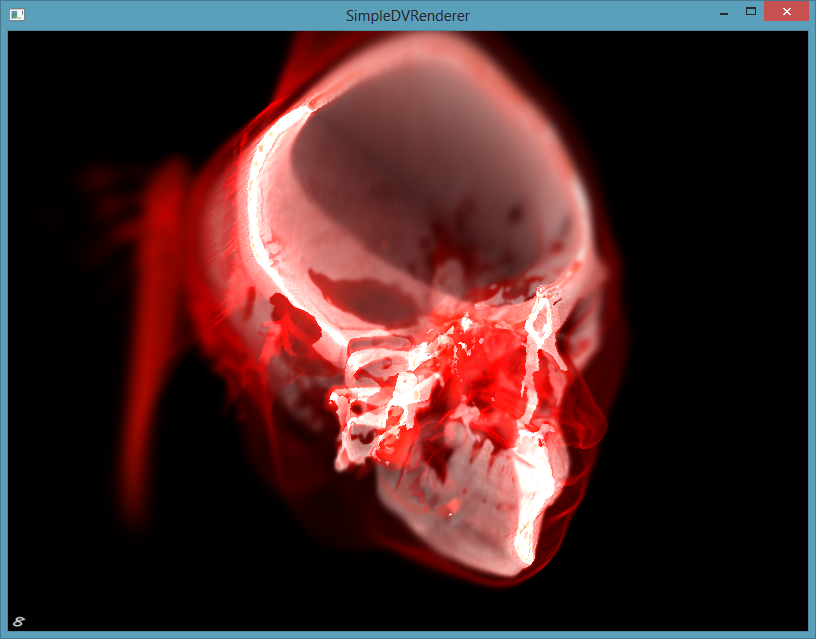

The first step was to implement a volume renderer that uses raycasting to sample the volume, together with a transfer function that is stored as a texture and looked up with the sampled density values. I implemented different compositing methods (front-to-back, back-to-front, maximum density, average density, first hit) purely in shaders.
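Two of these compositing methods can be sketched on the CPU for a single ray. The project does this in shaders; the version below is only an illustration, with the transfer function modelled as a plain lookup table over densities in [0, 1], nearest-entry lookup, and an early-termination threshold of 0.99, all of which are assumptions. The names `lookup`, `compositeFrontToBack` and `maximumDensity` are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Transfer function entry: color and opacity, not premultiplied.
struct Rgba {
    float r, g, b, a;
};

// Nearest-entry lookup into the transfer function table.
Rgba lookup(const std::vector<Rgba>& tf, float density)
{
    assert(!tf.empty());
    const float clamped = std::min(std::max(density, 0.0f), 1.0f);
    const std::size_t idx =
        static_cast<std::size_t>(clamped * float(tf.size() - 1) + 0.5f);
    return tf[idx];
}

// Front-to-back compositing of the densities sampled along one ray,
// ordered from the eye into the volume.
Rgba compositeFrontToBack(const std::vector<float>& densities,
                          const std::vector<Rgba>& tf)
{
    Rgba acc = {0.0f, 0.0f, 0.0f, 0.0f};
    for (float d : densities) {
        const Rgba s = lookup(tf, d);
        const float w = (1.0f - acc.a) * s.a; // contribution still visible
        acc.r += w * s.r;
        acc.g += w * s.g;
        acc.b += w * s.b;
        acc.a += w;
        if (acc.a > 0.99f) break; // early ray termination
    }
    return acc;
}

// Maximum density projection: keep only the largest sample along the ray.
float maximumDensity(const std::vector<float>& densities)
{
    return densities.empty()
               ? 0.0f
               : *std::max_element(densities.begin(), densities.end());
}
```

Back-to-front compositing uses the same samples in reverse order with the over operator, while average density and first hit replace the accumulation loop with a mean and a threshold test.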

To get the slices, I sample the volume at specific distances, which is also done in a shader. In the implementation of the depth-of-field effect, the slices are also created on the fly.

Visualization 2 :

Depth of Field for Interactive Volume Rendering