Speaker: Nidham Tekaya

Abstract

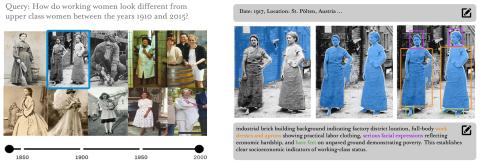

The analysis of cultural heritage through visual media faces a fundamental challenge: computer vision methods struggle to model and represent context. They focus primarily on identifying visual content such as objects, people, and scenes, but fail to capture how the interpretation and significance of that content evolve across historical periods.

This thesis addresses this limitation by developing computational methods for interactive learning and analysis of evolving visual context in historical image collections, recognizing that the same visual entities can be interpreted in drastically different ways depending on their temporal and social settings. Focusing primarily on cultural heritage and social phenomena, we propose a computer vision and visual analytics methodology that will enable users to explore contextual relationships in large-scale image collections, analyze contextual changes over time, and steer the analytics process through iterative feedback.

The envisioned methodology will integrate temporal modeling based on vision-language models, contribute novel context representations, investigate methods for the retrieval and exploration of context, and finally propose interactive refinement mechanisms that allow domain experts to guide and adapt the analysis system towards their individual interests. The developed approaches are intended to support domain experts in investigating specific research questions with a temporal or historical dimension in large-scale cultural heritage data.