3D Insight Cutaway Renderer

Cutaway renderings address the problem of important objects being occluded in a 3D scene. Simply removing the occluding object is not an option if context is important. Removing only portions of the occluder gives a full view of the object while preserving the spatial relationship between the object and the occluder. The paper "Adaptive Cutaways for Comprehensible Rendering of Polygonal Scenes" by Burns and Finkelstein describes an efficient way to create these cutaway renderings in real time.

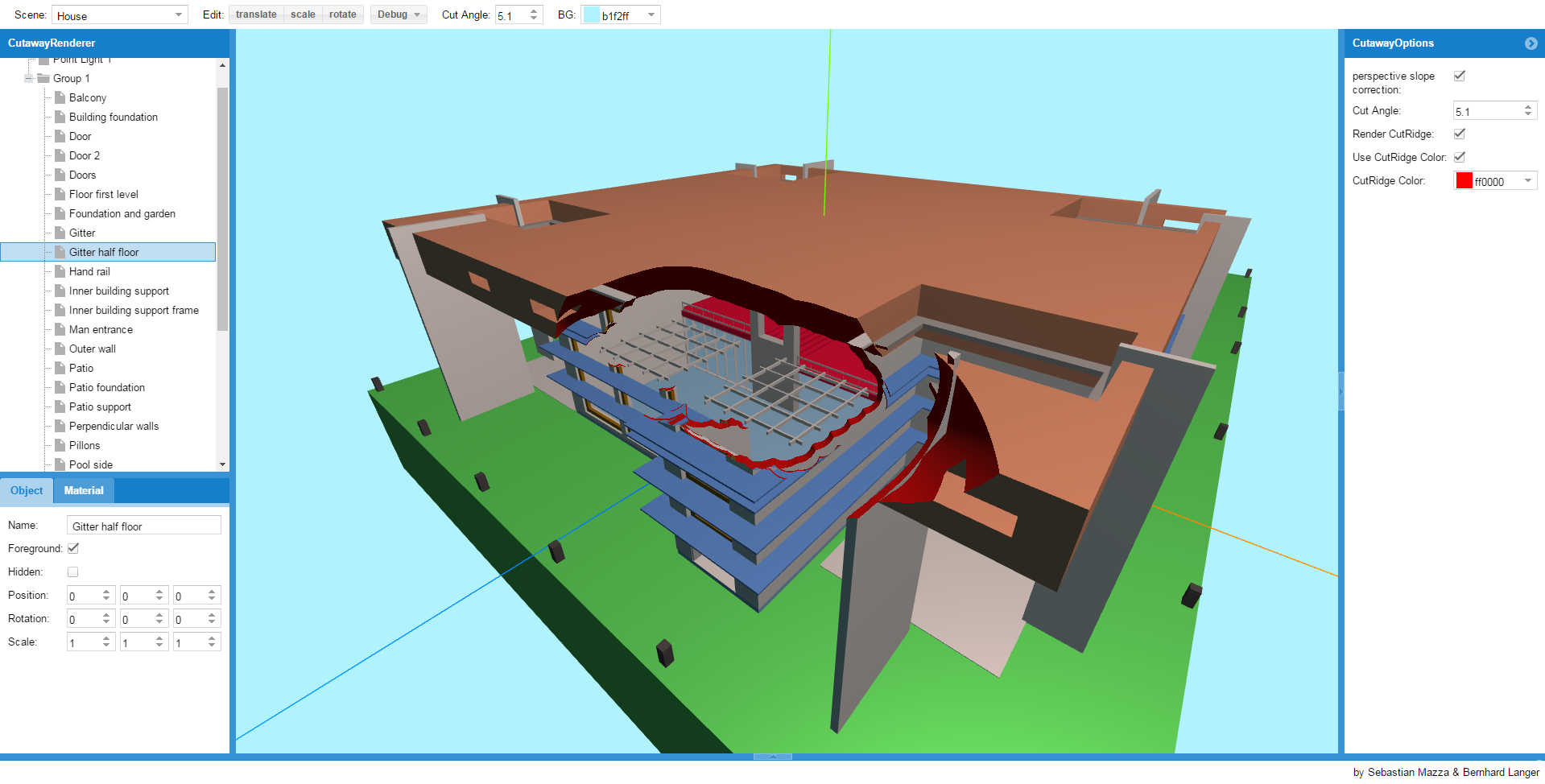

Interface and functions

Our implementation lets the user create these cutaway renderings in a web browser using WebGL. The user can open different scenes, manipulate object attributes (such as position, scale, and color), and select objects of interest. The program layout is divided into the main 3D rendering in the middle, the scene graph and object attributes on the left, and the debug options on the right.

Quick example

- Open the application using the "APP" button above

- Select the scene "House" in the "Scene" selection at the top left of the program

- The segments of the scene are listed on the left in the "Group 1" container

- Choose an object of interest (e.g. stairs) and check "Foreground" in the "Object" tab below

- Zoom into the scene using the mouse wheel

- Rotate the scene with the left mouse button

Cutaway Basics and Theory

The cutaway window is defined by a cutaway surface that originates from the object's hull (as seen from a specific view) and opens like a cone toward the viewer. Everything inside this cutaway surface is removed, which creates a direct view of the object. However, the mesh data does not have to be modified to create these cuts. It is sufficient to discard the fragments inside the cutaway surface in the shading stage. Only the view-dependent depth information of the cutaway surface is therefore needed to decide which fragments are rendered.
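The per-fragment decision can be illustrated with a minimal sketch. It is written in Python for readability rather than as shader code, and it assumes that depth values grow with distance from the viewer; the function name and its parameters are hypothetical and not taken from our implementation.

```python
def is_discarded(fragment_depth, cutaway_depth, is_object_of_interest):
    """Decide whether a fragment is removed by the cutaway.

    Assumption: larger depth means farther away from the viewer.
    cutaway_depth is the depth of the cutaway surface at the fragment's pixel.
    """
    # Objects of interest are never cut away.
    if is_object_of_interest:
        return False
    # A fragment in front of the cutaway surface lies inside the cut volume.
    return fragment_depth < cutaway_depth


# An occluder fragment in front of the cutaway surface is discarded,
# one behind the surface is kept.
print(is_discarded(0.3, 0.5, False))  # True
print(is_discarded(0.7, 0.5, False))  # False
```

In a fragment shader the same comparison would typically read the cutaway depth from a texture at the fragment's screen position and discard the fragment if the test succeeds.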

Algorithm to create the cutaway

The first step of the implementation is to render the object of interest into a depth image. The depth information of the cutaway surface is then computed by applying a distance transformation (a greyscale image operation) to the depth image. In our implementation we use the "jump-flood" algorithm, as described in the paper, to compute the distance transformation in parallel. In the fragment shader we then use the result of the distance transformation for an additional depth test that discards occluding fragments. Since discarding fragments of solid occluder objects creates holes in their meshes, we also want to fill these holes with a surface created by the cut. As with the cut itself, we do not have to create a new mesh to achieve this. Instead, we apply an additional rendering step to the exposed backfaces of the occluders, calculating the cutaway surface normal and light direction at each fragment.
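The distance transformation step can be sketched on the CPU as follows. This is a minimal illustration and not our WebGL code: it assumes that depth grows with distance from the viewer, that the cutaway depth at a pixel p is the maximum over all object pixels q of depth(q) minus slope times the pixel distance between p and q, and that background pixels are marked with None. The function name and the `slope` parameter are hypothetical.

```python
import math


def jump_flood_cutaway(depth, slope):
    """Approximate C(p) = max_q (depth[q] - slope * |p - q|) by jump flooding.

    depth: 2D list of depths, None marks pixels without an object of interest.
    Returns a 2D list of cutaway depths (-inf where no seed was reached).
    """
    h, w = len(depth), len(depth[0])

    def value(seed, x, y):
        # Depth of the cone with its apex at `seed`, evaluated at pixel (x, y).
        if seed is None:
            return -math.inf
        sx, sy = seed
        return depth[sy][sx] - slope * math.hypot(x - sx, y - sy)

    # Each pixel remembers the seed that currently gives it the deepest cutaway.
    best = [[(x, y) if depth[y][x] is not None else None for x in range(w)]
            for y in range(h)]

    # Start with half of the smallest power of two covering the image.
    step = 1
    while step < max(w, h):
        step *= 2
    step //= 2

    while step >= 1:
        nxt = [row[:] for row in best]
        for y in range(h):
            for x in range(w):
                # Look at the 9 samples at distance `step` (including self).
                for dy in (-step, 0, step):
                    for dx in (-step, 0, step):
                        nx, ny = x + dx, y + dy
                        if 0 <= nx < w and 0 <= ny < h:
                            cand = best[ny][nx]
                            if value(cand, x, y) > value(nxt[y][x], x, y):
                                nxt[y][x] = cand
        best = nxt
        step //= 2

    return [[value(best[y][x], x, y) for x in range(w)] for y in range(h)]


# A single object pixel in the centre of a 5x5 depth image.
depth = [[None] * 5 for _ in range(5)]
depth[2][2] = 10.0
cutaway = jump_flood_cutaway(depth, slope=1.0)
print(cutaway[2][2], cutaway[2][4])
```

Every pass only reads the result of the previous pass, which is why the same scheme maps onto a sequence of full-screen render passes on the GPU. With several seeds, jump flooding can miss the best seed for some pixels, so the result is an approximation of the exact maximum.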

Reference Paper:

Burns, M., & Finkelstein, A. (2008, December). Adaptive cutaways for comprehensible rendering of polygonal scenes. In ACM Transactions on Graphics (TOG) (Vol. 27, No. 5, p. 154). ACM.