Introduction

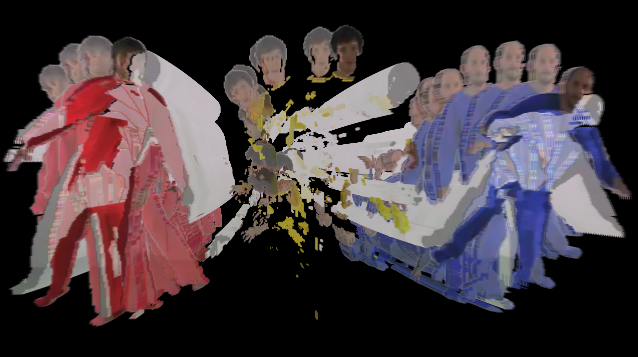

We have implemented a Direct Volume Rendering (DVR) approach for visualizing video sequences, based on the paper "Visualising Video Sequences Using Direct Volume Rendering" by Daniel and Chen [1]. A video sequence is summarized by visualizing the whole sequence as a single three-dimensional object, with time as the third dimension. Direct volume rendering then provides insight into the interior of this video volume, which requires a meaningful way to set the opacity of each voxel. Our implementation offers three options:

- Background Subtraction (static background elements are made transparent)

- Saliency Detection (only salient areas in each frame are made opaque)

- Hue Selection (opacity depends on a selected hue value)
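The video volume itself can be illustrated with a minimal CPU-side sketch. This is not the project's code: the `VideoVolume` and `Voxel` types are hypothetical, frames are assumed to be 8-bit RGB, and the alpha channel is taken from a per-pixel mask produced by one of the three options above (e.g. a foreground mask or a saliency map).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical RGBA voxel: color from the video frame, alpha from the selected option.
struct Voxel {
    std::uint8_t r, g, b, a;
};

// Frames of size width x height stacked along the third (time) axis.
struct VideoVolume {
    int width = 0, height = 0, depth = 0;  // depth = number of stored frames
    std::vector<Voxel> voxels;

    VideoVolume(int w, int h) : width(w), height(h) {}

    // Linear index with x varying fastest, then y, then t.
    std::size_t index(int x, int y, int t) const {
        return static_cast<std::size_t>(x) +
               static_cast<std::size_t>(width) *
                   (static_cast<std::size_t>(y) + static_cast<std::size_t>(height) * t);
    }

    // Appends one frame: rgb holds width*height*3 bytes in row-major order,
    // alphaMask holds width*height opacity values (0 = transparent, 255 = opaque).
    void appendFrame(const std::vector<std::uint8_t>& rgb,
                     const std::vector<std::uint8_t>& alphaMask) {
        const std::size_t n = static_cast<std::size_t>(width) * height;
        assert(rgb.size() == 3 * n && alphaMask.size() == n);
        for (std::size_t i = 0; i < n; ++i) {
            voxels.push_back({rgb[3 * i], rgb[3 * i + 1], rgb[3 * i + 2], alphaMask[i]});
        }
        ++depth;
    }

    const Voxel& at(int x, int y, int t) const { return voxels[index(x, y, t)]; }
};
```

Because x varies fastest, then y, then t, this array follows the texel order that `glTexImage3D` expects, so a volume like this could be uploaded directly as an RGBA 3D texture of size width x height x frame count.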

Implementation

The project was implemented in C++, using OpenGL for rendering and the computer vision library OpenCV (2.4.5) for video processing. The graphical user interface is built with Qt (4.8.4). Direct volume rendering is performed with a single-pass GPU raycasting approach.
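The heart of a raycaster is front-to-back compositing along each viewing ray. The sketch below runs on the CPU for illustration only; on the GPU the same loop would run in a fragment shader that samples a 3D texture. It assumes the ray's entry and exit points in normalized volume coordinates are already known (in a single-pass raycaster these are typically computed in the shader itself, e.g. by a ray/box intersection), and that `sample`, a hypothetical callback, returns non-premultiplied RGBA in [0, 1].

```cpp
#include <array>
#include <cassert>
#include <functional>

using Vec3 = std::array<float, 3>;  // position in normalized volume coordinates [0, 1]^3
using Rgba = std::array<float, 4>;  // red, green, blue, alpha in [0, 1]

// Marches from `entry` to `exit` in `numSteps` equal steps and composites the samples
// front to back; returns the accumulated color and opacity for this ray (one pixel).
Rgba castRay(const Vec3& entry, const Vec3& exit, int numSteps,
             const std::function<Rgba(const Vec3&)>& sample) {
    assert(numSteps > 0);
    Rgba acc = {0.f, 0.f, 0.f, 0.f};
    for (int i = 0; i < numSteps; ++i) {
        const float s = (i + 0.5f) / numSteps;  // sample at the center of each step
        Vec3 p;
        for (int k = 0; k < 3; ++k) p[k] = entry[k] + s * (exit[k] - entry[k]);
        const Rgba c = sample(p);
        // Contribution = remaining transparency along the ray times the sample opacity.
        const float w = (1.f - acc[3]) * c[3];
        for (int k = 0; k < 3; ++k) acc[k] += w * c[k];
        acc[3] += w;
        if (acc[3] >= 0.99f) break;  // early ray termination: the ray is nearly opaque
    }
    return acc;
}
```

Front-to-back order allows the loop to stop as soon as the accumulated opacity saturates, which is why it is the usual choice for GPU raycasting. Opacity correction for the sampling distance is omitted here for brevity.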

Our implementation differs from the approach presented in the paper in several respects. Instead of the difference metrics proposed there, such as Y-NMSE or Y-DIF, we chose the Gaussian mixture-based background/foreground segmentation algorithm by Kaewtrakulpong and Bowden [2], since it provides a more sophisticated background model (e.g. one that is less sensitive to lighting changes). Additionally, we included a saliency detection approach, which offers an alternative representation of the video by filtering out unimportant elements of the scene. For saliency detection we used the 2D image-based algorithm by Montabone and Soto [3], whose code is publicly available at http://www.samontab.com/web/saliency/. Finally, instead of being restricted to fixed views, the user can interactively rotate around the video visualization and zoom in or out.
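The idea behind Gaussian mixture background modeling can be illustrated for a single grayscale pixel. The sketch below follows the classic Stauffer-Grimson scheme on which [2] builds and is deliberately simplified: the refinements of [2] (different update rules during the initial learning phase, shadow detection) are omitted, and all parameter values (3 components, learning rate 0.01, background ratio 0.7, initial and minimum variance) are assumptions. OpenCV 2.4 ships an implementation of [2] as `cv::BackgroundSubtractorMOG`.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Assumed constants: initial sigma of 30 gray levels, lower bound sigma = 2.
constexpr double kInitVar = 900.0;
constexpr double kMinVar = 4.0;

// Simplified per-pixel mixture of Gaussians (Stauffer-Grimson style).
class PixelMixture {
    struct Component {
        double weight, mean, var;
    };

public:
    explicit PixelMixture(int maxComponents = 3, double learningRate = 0.01,
                          double backgroundRatio = 0.7)
        : maxK_(maxComponents), alpha_(learningRate), T_(backgroundRatio) {
        assert(maxComponents > 0 && learningRate > 0.0 && learningRate < 1.0);
    }

    // Feeds one intensity value into the model; returns true if it is foreground.
    bool update(double x) {
        // 1. First component (in weight/sigma order) within 2.5 standard deviations.
        int match = -1;
        for (int k = 0; k < static_cast<int>(comps_.size()); ++k) {
            if (std::abs(x - comps_[k].mean) <= 2.5 * std::sqrt(comps_[k].var)) {
                match = k;
                break;
            }
        }
        // 2. The first B components whose cumulative weight exceeds T form the background.
        int B = 0;
        double cumulative = 0.0;
        while (B < static_cast<int>(comps_.size()) && cumulative <= T_) {
            cumulative += comps_[B].weight;
            ++B;
        }
        const bool foreground = (match < 0 || match >= B);

        // 3. Update all weights, and mean/variance of the matched component.
        for (int k = 0; k < static_cast<int>(comps_.size()); ++k) {
            comps_[k].weight = (1.0 - alpha_) * comps_[k].weight + (k == match ? alpha_ : 0.0);
        }
        if (match >= 0) {
            Component& c = comps_[match];
            const double d = x - c.mean;
            c.mean += alpha_ * d;
            c.var = std::max(kMinVar, c.var + alpha_ * (d * d - c.var));
        } else {
            // 4. No match: add a component, replacing the least probable one if full.
            const Component fresh{alpha_, x, kInitVar};
            if (static_cast<int>(comps_.size()) < maxK_) comps_.push_back(fresh);
            else comps_.back() = fresh;
        }
        // Renormalize the weights and keep components sorted by weight / sigma.
        double sum = 0.0;
        for (const Component& c : comps_) sum += c.weight;
        for (Component& c : comps_) c.weight /= sum;
        std::sort(comps_.begin(), comps_.end(), [](const Component& a, const Component& b) {
            return a.weight / std::sqrt(a.var) > b.weight / std::sqrt(b.var);
        });
        return foreground;
    }

private:
    int maxK_;
    double alpha_, T_;
    std::vector<Component> comps_;
};
```

A full segmenter keeps one such model per pixel (per color channel or with multivariate Gaussians). Because the model adapts, a change that persists long enough is eventually absorbed into the background, while a static scene with slowly varying values stays background.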

Usage

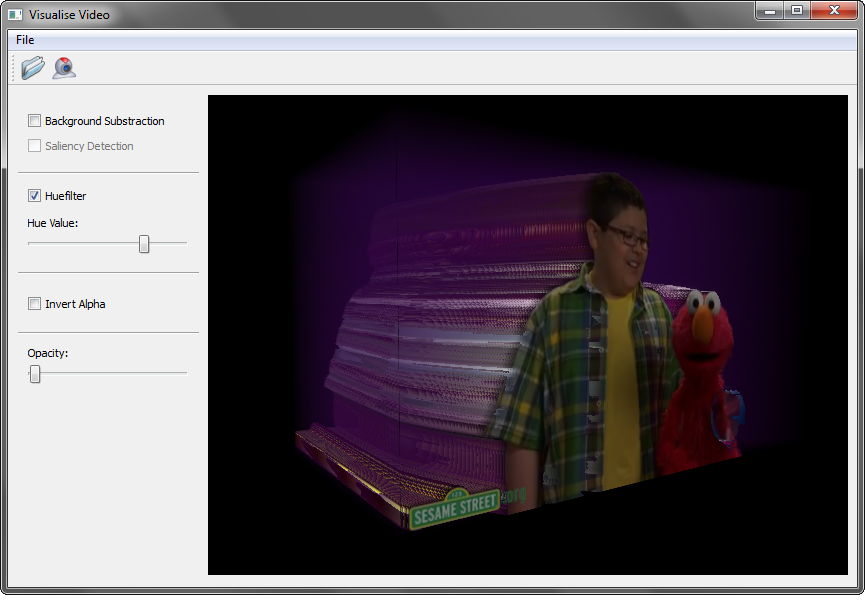

The screenshot above shows the main window of the application. A video can be loaded in two ways: from the file system or from a webcam, selected by clicking the folder icon or the webcam icon, respectively (alternatively, via the menu bar). Once a video has been selected, the visualization appears in the black area at the bottom right. The camera (viewpoint) is moved with the keys "W" (forward), "S" (backward), "A" (left), and "D" (right), and rotated by dragging the mouse.

The transparency of video elements is controlled by the options to the left of the visualization area. Depending on the choice made when loading the video, either saliency detection or background segmentation can be activated. In addition, hue selection can be activated, with the desired hue chosen via the slider. Finally, the alpha channel can be inverted; this is only possible when at least one of the selections is active, since otherwise nothing would be visible.
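Hue selection and alpha inversion can be sketched per pixel as follows. This is an assumption-based illustration: hue is computed in degrees with the standard RGB-to-HSV formula (OpenCV's 8-bit HSV conversion stores hue as 0-179 instead), achromatic pixels are treated as non-matching, the `tolerance` parameter is hypothetical, and combining the hue alpha with the segmentation or saliency alpha by multiplication is an assumption, not the documented behavior.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Hue of an RGB color in degrees [0, 360); returns -1 for gray pixels (hue undefined).
double hueDegrees(std::uint8_t r, std::uint8_t g, std::uint8_t b) {
    const double R = r / 255.0, G = g / 255.0, B = b / 255.0;
    const double maxC = std::max({R, G, B}), minC = std::min({R, G, B});
    const double delta = maxC - minC;
    if (delta == 0.0) return -1.0;
    double h;
    if (maxC == R) h = 60.0 * std::fmod((G - B) / delta, 6.0);
    else if (maxC == G) h = 60.0 * ((B - R) / delta + 2.0);
    else h = 60.0 * ((R - G) / delta + 4.0);
    return h < 0.0 ? h + 360.0 : h;
}

// Opaque (255) if the pixel's hue lies within `tolerance` degrees of the selected hue,
// measured as a circular distance; transparent (0) otherwise.
std::uint8_t hueAlpha(std::uint8_t r, std::uint8_t g, std::uint8_t b,
                      double selectedHue, double tolerance) {
    const double h = hueDegrees(r, g, b);
    if (h < 0.0) return 0;  // achromatic pixel: no meaningful hue
    double d = std::fabs(h - selectedHue);
    d = std::min(d, 360.0 - d);  // hue wraps around at 360 degrees
    return d <= tolerance ? 255 : 0;
}

// Combines the segmentation/saliency alpha with the hue alpha, optionally inverted.
std::uint8_t combineAlpha(std::uint8_t maskAlpha, std::uint8_t hueA, bool invert) {
    const int a = maskAlpha * hueA / 255;  // both selections must agree (assumption)
    return static_cast<std::uint8_t>(invert ? 255 - a : a);
}
```

When hue selection is inactive, passing 255 as `hueA` leaves the mask alpha unchanged, and inverting then turns the selected regions transparent and the rest opaque.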

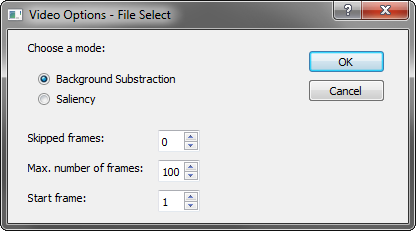

When loading a video, several parameters have to be set in the dialog shown above. Besides choosing between a video file and the webcam, the user selects either "Saliency Detection" or "Background Subtraction". In addition, the maximum number of processed frames, the start frame, and the number of frames skipped between two successive processed frames can be set. After clicking the "OK" button, the user either selects the video file (if file input was chosen) or the webcam starts capturing. Video processing can be aborted at any time by pressing the Escape key; in that case, only the frames processed so far are visualized.
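One way to interpret the frame parameters of the loading dialog is shown in the following sketch. The function name `selectFrames` is hypothetical, and reading "skipped frames" as a stride of `skip + 1` is an assumption; for webcam input, where the total length is unknown, `totalFrames` could simply be set to a large value.

```cpp
#include <cassert>
#include <vector>

// Hypothetical helper: indices of the frames read into the volume, given the dialog's
// start frame, number of skipped frames and maximum number of processed frames.
std::vector<int> selectFrames(int totalFrames, int startFrame, int skip, int maxFrames) {
    assert(startFrame >= 0 && skip >= 0 && maxFrames >= 0);
    std::vector<int> indices;
    // Skipping `skip` frames between two processed frames gives a stride of skip + 1.
    for (int f = startFrame;
         f < totalFrames && static_cast<int>(indices.size()) < maxFrames;
         f += skip + 1) {
        indices.push_back(f);
    }
    return indices;
}
```

The number of returned indices is the depth of the resulting video volume; aborting with Escape would simply stop partway through this list.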

Documentation & Downloads

- Source Code (incl. VS 2010 project)

- Executable (incl. DLLs)

- Doxygen Documentation

- Short Summary of the Chosen Paper

References

[1] G. W. Daniel and M. Chen. Visualising video sequences using direct volume rendering. In Proceedings of the 1st International Conference on Vision, Video and Graphics (VVG2003), Eurographics/ACM Workshop Series, Bath, pp. 103-110, July 2003.

[2] P. Kaewtrakulpong and R. Bowden. An improved adaptive background mixture model for real-time tracking with shadow detection. In Proceedings of the 2nd European Workshop on Advanced Video Based Surveillance Systems, volume 5308, 2001.

[3] S. Montabone and A. Soto. Human detection using a mobile platform and novel features derived from a visual saliency mechanism. Image and Vision Computing, 28(3), pp. 391-402, March 2010.