Implementing Eulerian Video Magnification

Eulerian Video Magnification (EVM) [Wu et al., 2012] is a technique for revealing and amplifying otherwise imperceptible changes in a video stream. For example, it can make visible the subtle colour changes in the human face caused by the pulse-driven variation in blood perfusion. Besides colour changes, it can also amplify subtle motions, such as the breathing motions of a baby.
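The core Eulerian idea can be shown for a single pixel:

1. Treat the pixel's intensity over time as a signal.
2. Band-pass filter that signal in time.
3. Add an amplified copy of the filtered band back to the original.

The following is a minimal sketch under these assumptions:

- The temporal bandpass is the difference of two first-order IIR lowpass filters. This is similar in spirit to the IIR variant in the authors' MATLAB reference, and is not necessarily the filter our tool uses.
- `magnify_pixel`, `r_low` and `r_high` are hypothetical names.
- A full implementation would apply this step to every pixel of every level of a spatial pyramid.

```python
import math


def magnify_pixel(samples, r_low, r_high, alpha):
    """Hypothetical helper: amplify temporal variations of one pixel.

    The bandpass is the difference of two first-order IIR lowpass filters;
    r_low < r_high are their smoothing coefficients in (0, 1], and a larger
    coefficient gives a higher cutoff frequency.
    """
    lp_fast = samples[0]  # follows the signal closely (higher cutoff)
    lp_slow = samples[0]  # follows only the slow baseline (lower cutoff)
    out = []
    for x in samples:
        lp_fast += r_high * (x - lp_fast)
        lp_slow += r_low * (x - lp_slow)
        band = lp_fast - lp_slow          # temporally bandpassed variation
        out.append(x + alpha * band)      # amplify and add back to the input
    return out


# A pixel with a tiny 1 Hz oscillation (e.g. a pulse), sampled at 30 fps.
fps = 30.0
signal = [0.5 + 0.002 * math.sin(2 * math.pi * 1.0 * n / fps) for n in range(300)]
magnified = magnify_pixel(signal, r_low=0.05, r_high=0.4, alpha=50)
```

With these settings, the 1 Hz oscillation is amplified by a factor of about 40 in steady state. A constant input passes through unchanged, because both lowpass filters settle on the same value and their difference vanishes.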

For this course we implemented EVM in C++ as described in the paper, targeting Linux and Mac. The implementation is a simple command line tool driven by a Python GUI. In the GUI, the user selects the input and output video files, designs the temporal bandpass filter (IIR or FIR) and sets the amplification factor.

Usage

The program has a simple command line interface that is driven by a Python GUI. The positional arguments for the command line are as follows:

GUI

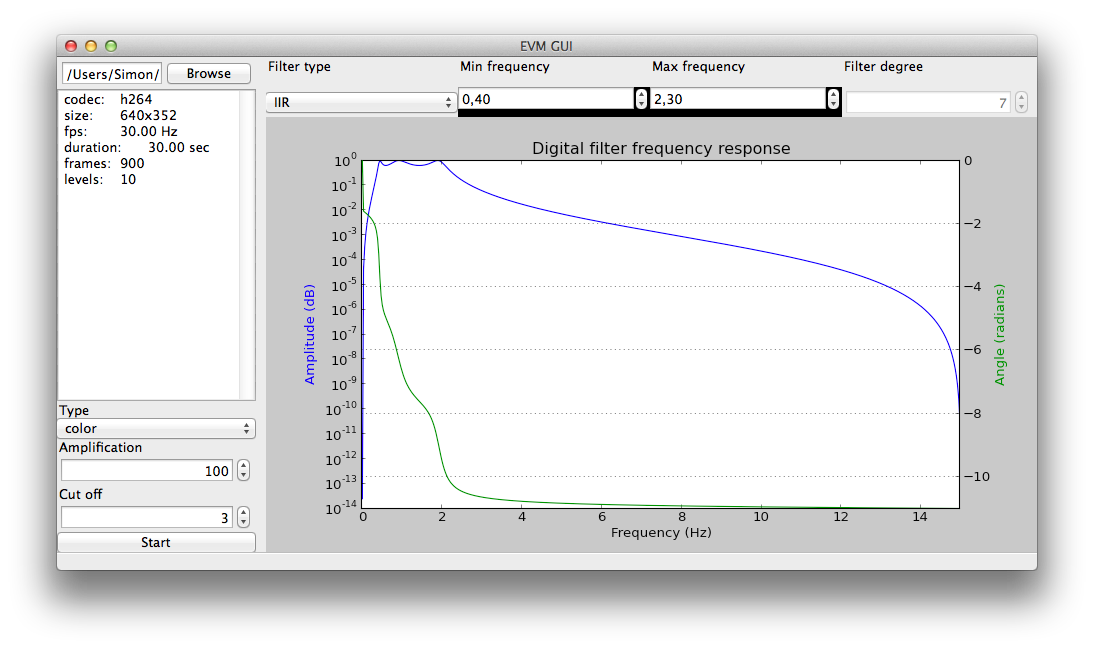

The Python GUI is an easy way to interface with the program, and it also offers a bandpass filter designer for the supported IIR and FIR filters.
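The sketch below illustrates what an IIR bandpass design involves. It computes the coefficients of one common second-order bandpass, the biquad from Robert Bristow-Johnson's Audio EQ Cookbook (constant 0 dB peak gain). It then applies the filter with the standard difference equation. This filter is an assumption chosen for illustration and is not necessarily one of the filter types our designer offers. `design_bandpass_biquad` and `apply_biquad` are hypothetical names.

```python
import math


def design_bandpass_biquad(f0, q, fs):
    """Hypothetical helper: second-order IIR bandpass with 0 dB gain at the
    centre frequency f0 (RBJ Audio EQ Cookbook). Returns (b, a), a[0] == 1."""
    w0 = 2 * math.pi * f0 / fs
    k = math.sin(w0) / (2 * q)  # RBJ's "alpha", unrelated to magnification
    a0 = 1 + k
    b = [k / a0, 0.0, -k / a0]
    a = [1.0, -2 * math.cos(w0) / a0, (1 - k) / a0]
    return b, a


def apply_biquad(b, a, x):
    """Filter x with the direct-form-I difference equation
    y[n] = b0 x[n] + b1 x[n-1] + b2 x[n-2] - a1 y[n-1] - a2 y[n-2]."""
    x1 = x2 = y1 = y2 = 0.0
    y = []
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y.append(yn)
    return y


# Pulse band around 1 Hz (60 bpm) for a 30 fps video.
fs = 30.0
b, a = design_bandpass_biquad(f0=1.0, q=1.0, fs=fs)
tone = [math.sin(2 * math.pi * 1.0 * n / fs) for n in range(600)]
dc = [1.0] * 600
tone_out = apply_biquad(b, a, tone)  # passes with unit gain in steady state
dc_out = apply_biquad(b, a, dc)      # decays towards zero
```

Because b0 + b1 + b2 = 0, this filter blocks DC completely, and its gain at f0 is exactly 1. A larger `q` gives a narrower pass band around f0.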

Information about the video is shown in the top left panel, and the parameters of the IIR or FIR filter are chosen in the top bar. The frequency and phase response plots are updated accordingly to illustrate the current filter settings.
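Response plots like these can be computed by evaluating the filter's transfer function on the unit circle. The following is a minimal sketch under these assumptions:

- The filter is given as feedforward coefficients `b` and feedback coefficients `a`, with `a[0] = 1`.
- `freq_response` is a hypothetical helper.

In practice, a library routine such as SciPy's `scipy.signal.freqz` computes the same quantity.

```python
import cmath
import math


def freq_response(b, a, freqs_hz, fs):
    """Hypothetical helper: evaluate H(e^{jw}) = B(e^{jw}) / A(e^{jw}) and
    return (magnitude, phase_in_radians) for each frequency in Hz."""
    result = []
    for f in freqs_hz:
        w = 2 * math.pi * f / fs    # normalised angular frequency
        z_inv = cmath.exp(-1j * w)  # e^{-jw}
        num = sum(bk * z_inv ** k for k, bk in enumerate(b))
        den = sum(ak * z_inv ** k for k, ak in enumerate(a))
        h = num / den
        result.append((abs(h), cmath.phase(h)))
    return result


# First-order lowpass y[n] = 0.6*y[n-1] + 0.4*x[n] at 30 fps.
freqs = [0.0, 1.0, 15.0]
resp = freq_response(b=[0.4], a=[1.0, -0.6], freqs_hz=freqs, fs=30.0)
for f, (mag, phase) in zip(freqs, resp):
    print(f"{f:5.1f} Hz  |H| = {mag:.3f}  phase = {math.degrees(phase):7.2f} deg")
```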

The magnification factor (alpha), the cutoff mode (motion or colour magnification) and the cutoff level are set in the lower left.
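For motion magnification, the paper bounds the amplification by the spatial wavelength λ of the signal, requiring (1 + α) δ(t) < λ/8, and attenuates α for wavelengths below a cutoff λ_c. The following is a minimal sketch of a per-level α under these assumptions:

- The bound is met with equality at λ_c.
- Each finer Laplacian pyramid level halves the representative wavelength.
- `attenuated_alpha`, `level_alphas` and `coarsest_wavelength` are hypothetical names.
- The GUI's cutoff setting corresponds to λ_c. This correspondence is itself an assumption.

```python
def attenuated_alpha(alpha, lambda_c, wavelength):
    """Hypothetical helper: amplification for a spatial wavelength (pixels),
    following the bound (1 + alpha) * delta(t) < lambda / 8.

    delta is fixed so that the bound holds with equality at lambda_c;
    shorter wavelengths get a linearly smaller alpha, longer ones keep alpha.
    """
    delta = lambda_c / (8.0 * (1.0 + alpha))  # largest allowed displacement
    alpha_at_wavelength = wavelength / (8.0 * delta) - 1.0
    return max(0.0, min(alpha, alpha_at_wavelength))


def level_alphas(alpha, lambda_c, coarsest_wavelength, n_levels):
    """Hypothetical helper: alpha per pyramid level, coarsest level first;
    each finer level halves the representative wavelength."""
    return [attenuated_alpha(alpha, lambda_c, coarsest_wavelength / 2 ** i)
            for i in range(n_levels)]


alphas = level_alphas(alpha=10, lambda_c=16, coarsest_wavelength=64, n_levels=5)
print(alphas)
```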

Results

Our implementation follows EVM as described in the paper, and within its limits we can reproduce and validate the reported results.

Runtime performance was only a secondary goal: our implementation runs reasonably fast, but not fast enough for real-time use.

Discussion

Our results come close to those of the original paper; the remaining differences can probably be attributed to the different filters used.

Another difference comes from performing the computations in different colour spaces. Our implementation works in the RGB colour space, whereas the original implementation uses the YIQ colour space. Because YIQ separates luminance (Y) from chrominance (I and Q), the authors can control the output chromaticity explicitly and keep it within reasonable limits.
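The YIQ route can be sketched as follows. Pixels are converted with the standard NTSC RGB-to-YIQ matrix, which uses the same coefficients as MATLAB's `rgb2ntsc`. The temporally filtered signal is then amplified with a smaller gain on the chrominance channels I and Q. The attenuation factor `chrom_attenuation` and the helper names are hypothetical. Afterwards, the filtered signal would be added to the YIQ frame and converted back to RGB with the inverse matrix, which this sketch omits.

```python
# NTSC RGB -> YIQ matrix: each row gives Y, I or Q as a weighted sum of R, G, B.
RGB_TO_YIQ = (
    (0.299, 0.587, 0.114),
    (0.596, -0.274, -0.322),
    (0.211, -0.523, 0.312),
)


def rgb_to_yiq(rgb):
    """Convert one (R, G, B) triple with components in [0, 1] to (Y, I, Q)."""
    return tuple(sum(m * c for m, c in zip(row, rgb)) for row in RGB_TO_YIQ)


def amplify_yiq(filtered_yiq, alpha, chrom_attenuation):
    """Hypothetical helper: amplify a temporally filtered YIQ signal, damping
    the chrominance channels (I, Q) so that colour shifts stay moderate."""
    y, i, q = filtered_yiq
    return (alpha * y, alpha * chrom_attenuation * i, alpha * chrom_attenuation * q)


white = rgb_to_yiq((1.0, 1.0, 1.0))  # Y = 1, I = Q = 0 up to rounding
boost = amplify_yiq((0.01, 0.01, 0.01), alpha=50, chrom_attenuation=0.1)
```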

Filters

We implemented both IIR and FIR bandpass filters. In our tests the IIR filters performed much better, because at small filter orders the FIR filters tend to behave like lowpass filters. This is problematic: the filter response is then dominated by unwanted low frequencies, which leads to an overly strong response and heavy clipping when the amplified filtered signal is added back to the original.
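The behaviour of short FIR filters can be explored with a windowed-sinc design. The sketch below builds a Hamming-windowed bandpass as the difference of two ideal lowpass impulse responses, then evaluates its gain. The function names and the 0.8 to 1.2 Hz pulse band are assumptions for illustration; our designer does not necessarily construct its FIR filters this way. With few taps, the window's main lobe is wider than the pass band, so much of the gain can spread towards DC. A long filter, by contrast, isolates the band.

```python
import math


def _ideal_lowpass(k, fc, fs):
    """Ideal (sinc) lowpass impulse response at offset k for cutoff fc in Hz."""
    wc = 2 * math.pi * fc / fs
    return wc / math.pi if k == 0 else math.sin(wc * k) / (math.pi * k)


def fir_bandpass(num_taps, f_low, f_high, fs):
    """Hypothetical helper: Hamming-windowed sinc bandpass (linear phase)."""
    if num_taps < 3 or num_taps % 2 == 0:
        raise ValueError("num_taps must be odd and at least 3")
    m = (num_taps - 1) // 2  # centre tap
    taps = []
    for n in range(num_taps):
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / (num_taps - 1))
        ideal = _ideal_lowpass(n - m, f_high, fs) - _ideal_lowpass(n - m, f_low, fs)
        taps.append(ideal * window)
    return taps


def gain(taps, f, fs):
    """Magnitude response |H(f)| of an FIR filter at frequency f in Hz."""
    w = 2 * math.pi * f / fs
    re = sum(h * math.cos(w * n) for n, h in enumerate(taps))
    im = sum(h * math.sin(w * n) for n, h in enumerate(taps))
    return math.hypot(re, im)


fs = 30.0  # frames per second
short_h = fir_bandpass(31, 0.8, 1.2, fs)
long_h = fir_bandpass(301, 0.8, 1.2, fs)
for name, h in (("31 taps", short_h), ("301 taps", long_h)):
    print(f"{name}: |H(0 Hz)| = {gain(h, 0.0, fs):.3f}, |H(1 Hz)| = {gain(h, 1.0, fs):.3f}")
```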

It would probably be worthwhile to investigate other filter classes further and to find good filter choices for specific use cases.

Known Issues

- Videos with a portrait aspect ratio are heavily distorted. This is most likely caused by a problem with libav.

- The output frame rate is fixed at 30 fps. Reading the frame rate from the input video and setting it on the output video is straightforward. However, different video formats support different frame rates, which makes frame-rate negotiation a non-trivial problem. All our test videos run at either 29.97 or 30 fps (frame durations of 1/29.97 s and 1/30 s), so this should not be a problem in practice.

- The frame count reported for the video and the count shown by the progress bar differ slightly.
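Rather than fixing the output at 30 fps, a simple negotiation policy would pick the supported rate closest to the input rate. The following sketch makes these assumptions:

- The output format is restricted to the eight frame rates allowed by MPEG-1/MPEG-2 video.
- The rate list and `negotiate_fps` are illustrative only and are not part of the libav API.

Other containers and codecs accept different sets of rates.

```python
from fractions import Fraction

# Frame rates allowed by MPEG-1/MPEG-2 video (frame_rate_code 1 to 8).
MPEG2_RATES = [
    Fraction(24000, 1001), Fraction(24), Fraction(25), Fraction(30000, 1001),
    Fraction(30), Fraction(50), Fraction(60000, 1001), Fraction(60),
]


def negotiate_fps(input_fps, supported):
    """Hypothetical helper: return the supported frame rate closest to the
    input rate; an exact match is kept unchanged."""
    return min(supported, key=lambda rate: abs(rate - input_fps))


print(negotiate_fps(Fraction(30000, 1001), MPEG2_RATES))  # 30000/1001
print(negotiate_fps(Fraction(15), MPEG2_RATES))           # 24000/1001
```

An exact match such as 30000/1001 (29.97 fps) is preserved. An unsupported rate such as 15 fps is mapped to 24000/1001, which would change the playback speed unless frames are duplicated or dropped.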

References

[Wu et al., 2012] Wu, H.-Y., Rubinstein, M., Shih, E., Guttag, J., Durand, F., and Freeman, W. T. (2012). Eulerian video magnification for revealing subtle changes in the world. ACM Trans. Graph. (Proceedings SIGGRAPH 2012), 31(4). http://people.csail.mit.edu/mrub/vidmag/

Acknowledgements/Remarks

A reference implementation in MATLAB is available from the authors' website, as is a free online service for video magnification.

As of June 7th 2013, EVM is marked as "patent pending" on the website.

The source videos for our results video have been taken from the website, assuming fair use for educational purposes.